Computers:

5 posters

Page 7 of 11


Simultaneous maximization of both CR and CTR is Google's Achilles heel


http://esr.ibiblio.org/?p=2975&cpage=4#comment-297888


What would the impact be on Android, especially vs. the iPhone, if Google's ad revenue were declining?

Observe that Google's primary Achilles heel is the often unattainable simultaneous maximization of both CR and CTR, or that paid and unpaid ads are not commingled. The way to eliminate this tension derives from a fundamental epiphany.

I have documented the algorithm in a private forum. It seems a possible outcome would be to diminish Facebook's lack of anonymity, if not seriously impact Facebook's valuation, because it would make it impossible to control what others see connected to any given page on the Web.

Last edited by Shelby on Sat Mar 05, 2011 6:03 pm; edited 1 time in total

Actors are not magic


http://lambda-the-ultimate.org/node/4200#comment-64604


Shelby wrote:

Carl Hewitt, PhD MIT wrote: Arbiters can be constructed so they are physically symmetrical and then strongly isolated from the environment. So it is hard to imagine a source of bias.

Coase's Theorem

Any artificial barrier ultimately fails. For example, your arbiter doesn't have infinite memory, and thus there is a bound on how much it can buffer while waiting to confirm delivery of the data it arbitrates.
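A minimal Scala sketch of the finite-buffer point, assuming the arbiter's pending deliveries sit in a fixed-capacity queue. The object name BoundedArbiterDemo and the capacity of 2 are hypothetical; the queue is java.util.concurrent.ArrayBlockingQueue, whose non-blocking offer returns false once the queue is full, so some wait, retry, or drop policy has to exist however the arbiter is built.

```scala
import java.util.concurrent.ArrayBlockingQueue

object BoundedArbiterDemo {
  // Hypothetical arbiter buffer: pending messages wait here until delivery is
  // confirmed. Memory is finite, so the buffer has some fixed capacity (2 here).
  val buffer = new ArrayBlockingQueue[String](2)

  // offer() does not block; it returns false when the buffer is full, so the
  // caller must decide whether to wait, retry, or drop the message.
  def submit(msg: String): Boolean = buffer.offer(msg)
}
```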

The bottom line is that the data and control flow of the algorithm have to be concurrent, and no amount of STM, Actors, or other hocus pocus will magically make it so.

Actors provide a low-level model of concurrency, but there still need to be high-level concurrent programming paradigms on top of that model, else the low-level model will fail.

This is related to the prior posts I have made about why a computer can never challenge human creativity, and to my recent posts about the Continuum hypothesis. What Hewitt is forgetting is that the computer is a finite, countable device with a finite number of programs, not a continuum process like the human brain or other natural processes in the universe.

Importance of Higher kinded generic types


http://www.codecommit.com/blog/ruby/monads-are-not-metaphors/comment-page-2#comment-5336

Shelby wrote:


Scala's higher-kinded types enabled the paradigm of Haskell's type classes, as Daniel did with Monad. See section 7.2, "Encoding Haskell’s type classes with implicits", of Generics of a Higher Kind.

Notably, section 6.1 (Implementation) explains why it may be difficult (if not impractical) to implement higher-kinded types for languages without type erasure, such as .NET languages, e.g. C#.
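To make the type-class encoding concrete, here is a minimal Scala sketch, assuming a hand-rolled Monad[M[_]] trait (not the Scalaz or standard-library definition) with implicit instances for Option and List. The generic pairUp (a hypothetical helper) is written once, and the compiler supplies the instance by implicit search; Option and List never inherit from Monad.

```scala
// A hand-rolled Monad type class over a type constructor M[_]
// (hypothetical, not the Scalaz or standard-library definition).
trait Monad[M[_]] {
  def unit[A](a: A): M[A]
  def bind[A, B](ma: M[A])(f: A => M[B]): M[B]
}

object Monad {
  // Instances live in the companion object, so implicit search finds them.
  implicit val optionMonad: Monad[Option] = new Monad[Option] {
    def unit[A](a: A): Option[A] = Some(a)
    def bind[A, B](ma: Option[A])(f: A => Option[B]): Option[B] = ma.flatMap(f)
  }

  implicit val listMonad: Monad[List] = new Monad[List] {
    def unit[A](a: A): List[A] = List(a)
    def bind[A, B](ma: List[A])(f: A => List[B]): List[B] = ma.flatMap(f)
  }
}

object TypeClassDemo {
  // Written once for any M with a Monad instance; the instance is passed
  // implicitly rather than inherited by M.
  def pairUp[M[_], A](ma: M[A])(implicit m: Monad[M]): M[(A, A)] =
    m.bind(ma)(a => m.unit((a, a)))
}
```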

Are variance annotations useless (or only useless to Haskell programmers)?


http://blog.tmorris.net/critique-of-oderskys-scala-levels/#comment-70483


Shelby wrote:

Tony Morris, author of the Scalaz library, wrote: First, I will get a couple of nitpicks out of the way. Martin’s L2 specifies that variance annotations will be used at this level. I (we: scalaz) propose that there is another level again, where you come to the realisation that variance annotations are not worth their use in the context of Scala’s limited type-inferencing abilities and other details. Instead, prefer a short-hand version of fmap and contramap functions.

Could that be because in Scalaz you are implementing the monad "bind" and "map" as duck-typing type classes (the Haskell way), instead of as a supertype interface (trait) of the monad subtype, as I proposed here?

Or here for a more readable version.

Duck typing breaks Liskov Substitution Principle.

Of course, Haskell doesn't have subtyping and uses duck typing exclusively. This violates SPOT and separation of concerns, which affects the Expression Problem, code readability, and maintenance. Extension by duck typing can break existing code when there are unintended collisions.

Composability is very important, but doesn't exist without referential transparency, which of course Haskell has and Scala does not enforce.

I haven't had time to study your Scalaz versus the Scala standard libraries, so I can't comment on the relative merits.

Tangent: I found Edward Kmett's attempt to add variance annotations to structural typing Monad implementation strategy. I still think my proposed subtyping strategy may be superior.

Well, Tony Morris proved himself to be lacking integrity. He had replied to the above saying, basically, that I was a confused idiot, that he is using inheritance of "an interface", that he was emulating Haskell's ad hoc polymorphism, and that he didn't know why I mentioned "duck typing", so I made the following replies. Then he deleted his reply and deleted both the reply above and the other two below. I can see all three of these replies now awaiting moderation, even though the one above had already been publicly displayed on his blog and he had already replied calling me an idiot. This shows the man cannot accept being proven wrong. To censor rational debate is the antithesis of a search for knowledge. Note that Tony Morris has a history of being obstinate; as evidence, you can review his replies and topics to others in the threads mentioned in this post, this thread full of obscenities, and his comments in this thread by Gilad Bracha, one of the designers of Java.

http://blog.tmorris.net/critique-of-oderskys-scala-levels/#comment-70509

Shelby wrote:

Exactly as I expected: I am looking at your code here, and instances of List[T] do not inherit from Monad[M[_]]. Instead, you are using implicits to simulate inheritance using structural typing (a/k/a duck typing, “if it quacks like a duck, it must be a duck”). This is the Scala way of emulating Haskell’s type class; see section 7.2, Encoding Haskell’s type classes with implicits, of Generics of a Higher Kind. The link I provided in my prior post uses a similar construct, so apparently you didn’t bother to click it.

There is no distinction between ad-hoc polymorphism and structural typing w.r.t. the point I was making about extensibility issues.

You are confused and rude.

http://blog.tmorris.net/critique-of-oderskys-scala-levels/#comment-70512

Shelby wrote:

I see my rebuttal is waiting for you to release it from moderation.

Given my rebuttal, my original point remains, which is that of course you don’t get any benefit from variance annotations when you structure your inheritance using implicits to emulate Haskell’s type class, instead of the subtyping way I proposed in the link of my first comment.
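For contrast, a minimal sketch of a subtyping-based encoding, in which bind is an inherited method of a covariant supertrait rather than an implicit instance. All names (MonadLike, Maybe, Just, Empty, MaybeDemo) are hypothetical, and this is not the linked proposal itself, only an illustration of where a variance annotation pays off: because A is declared +A, the singleton Empty (a Maybe[Nothing]) is accepted wherever a MonadLike[Int, Maybe] is expected.

```scala
// Hypothetical subtype-based encoding: bind is inherited from a supertrait.
// M[+_] requires the implementing type constructor to be covariant.
trait MonadLike[+A, M[+_]] {
  def bind[B](f: A => M[B]): M[B]
}

sealed trait Maybe[+A] extends MonadLike[A, Maybe]

final case class Just[+A](value: A) extends Maybe[A] {
  def bind[B](f: A => Maybe[B]): Maybe[B] = f(value)
}

// Empty is a Maybe[Nothing]; the +A annotation makes it a Maybe[T] for every T.
case object Empty extends Maybe[Nothing] {
  def bind[B](f: Nothing => Maybe[B]): Maybe[B] = Empty
}

object MaybeDemo {
  // Accepts any subtype of the supertrait; covariance lets Empty through.
  def incr(m: MonadLike[Int, Maybe]): Maybe[Int] = m.bind[Int](x => Just(x + 1))
}
```

Without the +A annotation, MaybeDemo.incr(Empty) would not type-check, because Maybe[Nothing] would be unrelated to Maybe[Int].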

You are apparently trying to do Haskell in Scala instead of leveraging Scala’s subtyping strengths; no wonder you are so frustrated and lashing out. Scala can’t match Haskell’s strengths, because Haskell doesn’t have subtyping, which I understand makes type inference much simpler in Haskell.

Here is screen capture as evidence.

==================

Another Thread

==================

We see the same pattern from Tony Morris here. He had approved the first comment below and had replied saying I was an idiot and that I needed to better learn my "stupid language Java". Now he has removed his reply, and both of my comments have disappeared from his blog, except that I can see they are "awaiting moderation". I know how WordPress works; this means he banned me from posting to his blog and retroactively moved all my comments back to spam. This is clear evidence that he deleted his reply. Unfortunately, I did not think to capture his reply before he deleted it.

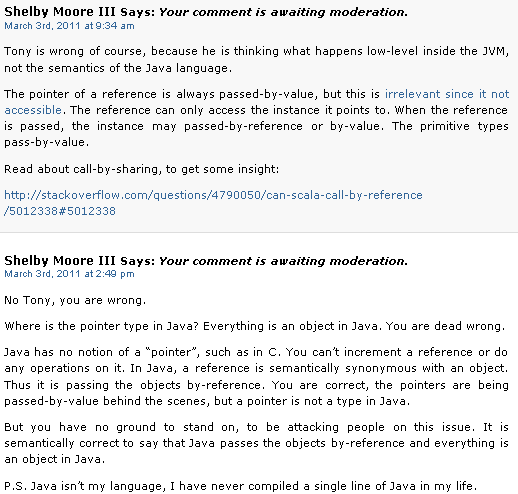

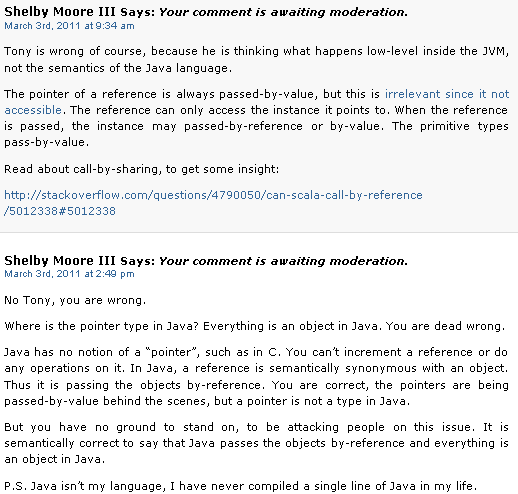

http://blog.tmorris.net/java-is-pass-by-value/#comment-70462

Shelby wrote:

Tony is wrong, of course, because he is thinking about what happens at a low level inside the JVM, not about the semantics of the Java language.

The pointer of a reference is always passed-by-value, but this is irrelevant since it is not accessible. The reference can only access the instance it points to. When the reference is passed, the instance may be passed-by-reference or by-value. The primitive types pass-by-value.

Read about call-by-sharing, to get some insight:

http://stackoverflow.com/questions/4790050/can-scala-call-by-reference/5012338#5012338
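A small Scala sketch of the call-by-sharing behavior referenced above, as it plays out on the JVM. The Counter class and SharingDemo object are hypothetical. Mutating the shared instance is visible to the caller, while rebinding a copy of the reference is not. Scala parameters are immutable vals, so the rebinding is shown with a local var; a reassigned Java parameter behaves the same way.

```scala
// Hypothetical mutable holder used only for this illustration.
final class Counter(var value: Int)

object SharingDemo {
  // The callee receives a copy of the reference, so both sides see the same
  // object: mutation through it is visible to the caller.
  def bump(c: Counter): Unit = c.value += 1

  // Rebinding the copied reference only changes the local variable;
  // the caller's variable still points at the original object.
  def rebind(c: Counter): Unit = {
    var local = c
    local = new Counter(100)
    local.value += 1
  }
}
```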

http://blog.tmorris.net/java-is-pass-by-value/#comment-70510

Shelby wrote:

No Tony, you are wrong.

Where is the pointer type in Java? Everything is an object in Java. You are dead wrong.

Java has no notion of a “pointer”, such as in C. You can’t increment a reference or do any operations on it. In Java, a reference is semantically synonymous with an object. Thus it is passing the objects by-reference. You are correct, the pointers are being passed-by-value behind the scenes, but a pointer is not a type in Java.

But you have no ground to stand on when attacking people on this issue. It is semantically correct to say that Java passes the objects by-reference, and everything is an object in Java.

P.S. Java isn’t my language, I have never compiled a single line of Java in my life.

Here is screen capture as evidence.

=================

One More Thread

=================

This post had appeared publicly on his blog, and has now been banished back to moderation, as shown in the screen capture below.

http://blog.tmorris.net/applicative-functors-in-scala/#comment-70507

Shelby wrote:

Can’t you use (None : Option[Int]) in place of none? It seems I read somewhere that Scala has that compile-time cast (type ascription) for aiding type inference.
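A minimal sketch of the type ascription asked about above. With foldLeft(None), Scala 2 infers the accumulator type as None.type, and the Some(x) branch then fails to type-check; ascribing None : Option[Int] fixes the accumulator type up front. The maxOption helper is hypothetical; Option.empty[Int] is an equivalent standard-library spelling.

```scala
object AscriptionDemo {
  // Largest element, or None for an empty list. The ascription widens the
  // initial None to Option[Int], so both match branches share that type.
  def maxOption(xs: List[Int]): Option[Int] =
    xs.foldLeft(None: Option[Int]) { (acc, x) =>
      acc match {
        case Some(m) if m >= x => acc
        case _                 => Some(x)
      }
    }
}
```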

Last edited by Shelby on Fri Mar 04, 2011 9:42 pm; edited 16 times in total

Applicative functor


http://www.codecommit.com/blog/ruby/monads-are-not-metaphors/comment-page-2#comment-5338

Shelby wrote:


There is a fascinating discussion about the practical utility of monads for mainstream programming. That discussion morphs from an initial hypothesis that monads vs. actors can be used to interoperate imperative and functional programming, to a recognition that monads provide abstraction of widespread programming paradigms, e.g.

http://gbracha.blogspot.com/2011/01/maybe-monads-might-not-matter.html?showComment=1295992148701#c6727611476735111271

Perhaps an even more widely used abstraction is the Applicative, which is a supertype of a pure monad:

http://gbracha.blogspot.com/2011/01/maybe-monads-might-not-matter.html?showComment=1295998124929#c138376235115429371

http://blog.tmorris.net/applicative-functors-in-scala

http://blog.tmorris.net/monads-do-not-compose/

It might not be easy for some to grasp Applicative from that research paper, and I have a layman's concise tutorial, but I will wait to provide a link once my implementation is complete, because the tutorial uses my syntax. I promise it won't be incomprehensible for Java laymen.

Applicative is a neat pattern, because it allows the application of a function distributed (curried) over the wrapped elements of a tuple of Applicatives, and unlike Monad#bind, the function is always called and cannot be short-circuited by the computation and/or state of the wrapping.
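A minimal Scala sketch of the Applicative interface described above, assuming hand-rolled names (Applicative, pure, ap, map2) modeled on the pure/ap interface from the research paper rather than any particular library. map2 distributes a curried two-argument function over two wrapped values by calling ap twice. Note that in this Option instance an empty argument yields None without the function being called.

```scala
// Hand-rolled Applicative type class (hypothetical names, modeled on the
// pure/ap interface from the applicative-functor literature).
trait Applicative[F[_]] {
  def pure[A](a: A): F[A]
  def ap[A, B](ff: F[A => B])(fa: F[A]): F[B]
}

object Applicative {
  implicit val optionApplicative: Applicative[Option] = new Applicative[Option] {
    def pure[A](a: A): Option[A] = Some(a)
    // Both sides are inspected independently; an empty side yields None.
    def ap[A, B](ff: Option[A => B])(fa: Option[A]): Option[B] =
      (ff, fa) match {
        case (Some(f), Some(a)) => Some(f(a))
        case _                  => None
      }
  }

  // Distribute a curried two-argument function over two wrapped values.
  def map2[F[_], A, B, C](fa: F[A], fb: F[B])(f: A => B => C)(implicit F: Applicative[F]): F[C] =
    F.ap(F.ap(F.pure(f))(fa))(fb)
}
```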

However, and this is the main reason I make this post here, an empty container is incompatible with Applicative if the emptiness is not a type parameter of the container, because the empty container cannot provide an empty element to the function application if the function is not expecting it (i.e. does not accept an empty type). This means that Nil for an empty List type is incompatible with Applicative. Only List[T] extends Applicative[Option[T]] will suffice. In my prior comments on this page, I had mentioned that I expected problems with Nil and prefer to use an Option[T] return type on element access to model an empty container.

The prior linked research paper seems to conflict with my assertion, wherein it shows how to implement Applicative#ap (apply) for monads on page 2, and states that every monad is an Applicative in section 5 and in the conclusion, section 8. However, what I see is that although Monad#ap can return the same value as Applicative#ap, the side effects are not the same. Applicative#ap will always call the input function (with empty elements as necessary), and Monad#ap will return an empty value and short-circuit calling the function. The research paper alludes to this in its discussion of the differences in evaluation tree in section 5. I think the research paper is coming from the mindset of Haskell ad-hoc polymorphism (i.e. tagged structural typing), where there is no subtyping, thus Monad#ap is not expected to honor the semantic conditions of the Liskov Substitution Principle, i.e. Monad is not a subtype of Applicative in Haskell. This is a real-world example of why I am against ad-hoc polymorphism and structural typing. Of course, side effects are not supposed to matter in a 100% pure language such as Haskell.

Anyway, back to the point that Nil is incompatible with Applicative side-effect semantics (when either the container is not a monad or Applicative#ap does not always input a pure function to apply): perhaps this might be one example of Tony Morris's (author of Scalaz) criticism of Scala's libraries, given that in Scalaz Monad is apparently a subtype of Applicative. I am not taking a stance yet on the general criticism, because my library implementation is not complete, so I do not yet know what I will learn.

http://www.codecommit.com/blog/ruby/monads-are-not-metaphors/comment-page-2#comment-5341

Shelby wrote:

@Horst

Correction: I have just clarified my linked documentation, translated as follows: The semantics of Applicative[T]#ap do not allow a subtype which is non-invertible to an unwrapped T, i.e. an empty container is not allowed, unless there is a subtype of the subtype (or a subtype of T) that represents the null state (e.g. the subtype, or T, is an Option type).

Perhaps that is implicit in the four laws for Applicative. I will check that next.

Thus I think Applicative#ap can be implemented for an empty container using Nil in Scala, by returning (Nil : List[B]), where I assume that is the correct Scala syntax for a compile-time cast of List[Nothing] (a/k/a Nil) to List[B], B being the expected return type of the curried function.

Alternatively, Applicative#ap could be implemented for an empty container using Option elements, by returning the wrapped curried function with (None : Option[B]). This requires every possible composition function to accept Option. So now I am seeing some good justification for the Nil strategy of Scala's library.
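A sketch of the first option above (returning Nil for an empty container), assuming a List-based ap that applies every function to every element; the ListAp object is hypothetical. The ascription (Nil : List[B]) is valid Scala syntax, although here the declared return type and List's covariance would already let a bare Nil type-check.

```scala
object ListAp {
  // Applicative ap for List: apply every function to every element.
  // An empty side yields an empty List[B]; the ascription widens Nil
  // (a List[Nothing]) to the expected result type.
  def ap[A, B](fs: List[A => B])(xs: List[A]): List[B] =
    if (fs.isEmpty || xs.isEmpty) (Nil: List[B])
    else for { f <- fs; x <- xs } yield f(x)
}
```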

Also studying the situation more, I realize there is no difference between the side-effects of the Applicative#ap and Monad#ap. I think I was conflating in my mind with Monad#bind.
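A small sketch consistent with the correction above, for Option only: ap written directly in applicative style and ap derived from bind (flatMap plus map) return the same values, and a call counter shows that neither invokes the function when the argument is empty. The names ApComparison, applicativeAp, monadAp, and countingInc are hypothetical.

```scala
object ApComparison {
  // Counts how many times countingInc has been invoked.
  var calls = 0

  val countingInc: Int => Int = { x =>
    calls += 1
    x + 1
  }

  // Applicative-style ap: inspect both sides independently.
  def applicativeAp[A, B](ff: Option[A => B])(fa: Option[A]): Option[B] =
    (ff, fa) match {
      case (Some(f), Some(a)) => Some(f(a))
      case _                  => None
    }

  // Monad-style ap: derived from bind (flatMap) and map.
  def monadAp[A, B](ff: Option[A => B])(fa: Option[A]): Option[B] =
    ff.flatMap(f => fa.map(f))
}
```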

So I no longer see any problem, nor a requirement for a pure function to solve it.

Hopefully I have helped explain Applicative and provide further insight in how to simplify the explanation of Monad.

Thus I don't yet know of an example of why Morris is dissatisfied with the Scala libraries.

P.S. Note that in the linked docs in my prior comment, I am currently using Maybe as a synonym for Option.

http://www.codecommit.com/blog/ruby/monads-are-not-metaphors/comment-page-2#comment-5343

I still don't like Nil (extends List[Nothing]), because unlike None (extends Option[Nothing]), a Nil can throw an exception on accessing head or tail. In general for all programming, I want to always force unboxing and make throwing an exception illegal (for the scalable composition reason explained in an early comment on this page).

So I realized there is a third way to represent an empty list (or in general a container), which never throws an exception and I think it is the optimum one compared against the two alternatives I presented in the prior comment.

sealed trait OptionList[+T]

case class List[+T](head: T, tail: OptionList[T]) extends OptionList[T]

case object Nil extends OptionList[Nothing]

(or case class Nil[T]() extends OptionList[T])

The point is that when you have a List, it can't be empty and there is no need to unbox, and when you have an OptionList, you must unbox (i.e. match-case) it before you can access any members of List, e.g. head or tail. If you get a case Nil upon unboxing, then it has none of the List members that throw an exception in Scala's current Nil, e.g. head and tail.

Now I need to discover why Scala didn't implement Nil this way. Maybe there is an important tradeoff I am not aware of yet.
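A runnable sketch of the OptionList idea, assuming a sealed hierarchy so the compiler can check pattern matches for exhaustiveness. The constructors are renamed NonEmpty and Empty here (hypothetical names) so they do not shadow scala.List and scala.Nil; headOr and size are likewise hypothetical helpers. head exists only on NonEmpty, so code holding an OptionList must match before it can reach head, and no accessor throws on an empty list.

```scala
object OptionListDemo {
  // Hypothetical names: NonEmpty/Empty stand in for the List/Nil above.
  sealed trait OptionList[+T]
  final case class NonEmpty[+T](head: T, tail: OptionList[T]) extends OptionList[T]
  case object Empty extends OptionList[Nothing]

  // head is only reachable after matching, so there is no throwing accessor.
  def headOr[T](xs: OptionList[T], default: T): T = xs match {
    case NonEmpty(h, _) => h
    case Empty          => default
  }

  def size(xs: OptionList[Any]): Int = xs match {
    case NonEmpty(_, tail) => 1 + size(tail)
    case Empty             => 0
  }
}
```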

temporaryokay


http://us.cnn.com/2011/TECH/gaming.gadgets/03/04/nintendo.apple.games/index.html?hpt=Sbin

Developers are going to need to reuse code and get paid across all the myriad of apps that use their snippets, in order to be able to have an economic ROI for programming.

The "everybody bakes their own from scratch" model doesn't work, when diversity explodes.

This is exactly what I envisioned and why I have been trying to find (and am now creating) something like Copute since 2008.


Origin of the word "blog"


1953 Dr. Seuss, but in the context of sweet frogs:

http://www.bookofjoe.com/2005/03/the_origin_of_t.html

1997 as "weblog":

http://en.wikipedia.org/wiki/Blog

1999 as "blog":

http://groups.google.com/group/deja.comm.weblogs/browse_thread/thread/6c235f317e9967/dc5b0aea621df713?q=blogging&_done=%2Fgroups%3Fhl%3Den%26lr%3Dlang_en%26ie%3DUTF-8%26safe%3Doff%26num%3D20%26q%3Dblogging%26qt_s%3DSearch+Groups%26as_drrb%3Db%26as_mind%3D1%26as_minm%3D1%26as_miny%3D1999%26as_maxd%3D31%26as_maxm%3D12%26as_maxy%3D1999%26&_doneTitle=Back+to+Search&&d#dc5b0aea621df713


Web 2.0 suicide machine


Remove your data from every major Web 2.0 app:

http://suicidemachine.org/

Facebook sent them a cease and desist order:

http://suicidemachine.org/download/Web_2.0_Suicide_Machine.pdf

Some people allege that Facebook is cooperating with the CIA to identify everyone in the world. The internet is a giant intelligence-gathering operation; that is why the government will never kill the internet.


Leveled Garbage Collection superior to GGC


http://lambda-the-ultimate.org/node/4220


Shelby wrote:

I had suggested an idea for improving Haskell's handling of space "leaks", with a similar preference for page-fault load as the metric for promotion.

Note LtU censors all my submissions, so the above was submitted but probably never displayed.

Once we get pagedirt.com working, we can put an end to censorship on the web.

Last edited by Shelby on Tue Mar 08, 2011 4:15 am; edited 2 times in total

My "eliminate all exceptions" is beautiful code


I couldn't be more ecstatic with the elegant, concise, and complete (no corner cases) way the code turned out:

http://copute.com/dev/docs/Copute/ref/std/SignedState.copu

Here are the links to my prior announcement of a major breakthrough, for which the code above is the implementation:

http://copute.com/dev/docs/Copute/ref/intro.html#Convert_Exceptions_to_Types

https://goldwetrust.forumotion.com/t112p135-computers#4249


How to launch Copute.com (not language) immediately as "cooperative coding" platform?


I welcome frank feedback from anyone; I love feedback, even negative feedback.

Copute.com is an awesome domain name, and it is not being used in a popular site yet. My CoolPage.com also has potential if I can ever find a great site concept for it.

As I had explained in prior posts in this thread, the open source programming model is designed to foster contribution and sharing, and it has been much more successful than the closed source model. The examples of open source defeating closed source are numerous: Firefox is better than Internet Explorer, MySQL is more popular than the closed source databases (Oracle, Microsoft SQL Server, etc.), and Google Android is killing the Apple iPhone and Microsoft phones and will eventually replace Windows.

A fundamental problem with open source is that there is no way for an individual contributor to get paid independently. You can't just dig in, write some code snippets, upload them, and wait to get paid as they are used by the market. There is no such market, and no marketing, for building blocks of code contribution. You instead have to build a complete software product of sufficient scale and market it, something very few people in the world can do successfully (this is why the iPhone and Amazon app stores are growing so phenomenally, yet the individual programmer contributors are not even recovering the time they invested, because of dozens or hundreds of duplicate and silly applications). Imagine if it were that simple and efficient to contribute to the world economy and profit from your home office. Imagine all those unemployed (or underemployed in terms of pay, interesting projects, and satisfaction) millions of Chinese and Indian engineering graduates living at their parents' houses. To get paid to work on open source, you need to join a company that wants to pay you to work on open source. I wish I could change this, so that millions of individual contributions could be incentivized. I see huge untapped sources of programmers in India and China, who are highly underpaid and who don't gain enough experience because they are not exercising their abilities enough in a free market.

Originally I started Copute with a focus on making a better computer language that would interoperate better technologically and thus foster more reusable modules of code. I am glad I did, as I learned a lot of new technology and made a lot of progress from Dec. 17, 2010 to March 15, 2011. I still think this is necessary, and I want to find a way to complete the Copute computer language concept. However, I realized that what the world probably needs first is a free market in which to trade reusable code. If such a free market became popular, the problems inherent in existing computer language reusability would become more apparent, and would drive demand for a new computer language such as Copute.

So I am now spending some time thinking about how such a free market might work. Remember that originally I had proposed that all code would be profiled (meaning use would be tracked), and then contributors would be paid based on the % of CPU time used by their code. I have since decided this is a non-starter for numerous reasons. The world is rightfully resistant to use tracking (privacy and tethering issues), and dictating a relative price based on relative CPU use is a one-size-fits-all concept of value, which is the antithesis of a free market.


This looks pretty easy and a no-brainer. Has no one already attempted this?

The closest I see so far are BountySource and Donations (both appear to have failed), which allow users to band together to offer a reward for improvements to code; that does not have the same force as my model described above:

http://en.wikipedia.org/wiki/Comparison_of_free_software_hosting_facilities#Features

http://en.wikipedia.org/wiki/BountySource

Reference: Mechanism Design to Promote Free Market and Open Source Software Innovation

Open-source economics


Issues:

- How do we make users pay? Anyone distributing code from our system must use an installation program that interacts with our database. Or they must track installations and be willing to open their books to a 3rd-party audit if we question their royalty payments. This is policed by the contributors of code to our free market system (this is why I prefer to prioritize client-side applications first, not server-side). The code will be copyrighted. Unlike for pirated music, there won't be much incentive to pirate our code, because the people distributing software products employing our code will likely be giving away the software for free and making money via advertising and/or service subscriptions. The people who distribute the code (e.g. if Firefox uses some of our code one day, or Google uses it) will pay us for all their user activations. In other words, our business model is B2B (business-to-business), and we aim to employ thousands or millions of programmers. Note that I think the license should state that the installation program must ensure our database is incremented each time a copy of the code is "installed" on a client computer, so for JavaScript browser apps that means setting a cookie (or a user signup if login is always required).

Philosophical tangent: Many people have argued against copyright, but they were actually arguing against closed source. The Bible says thou shalt not steal. Jason Hommel, who is against patents, says you can't give away for free something that isn't free. That would be socialism, and it ends in failure. No one wants to use the courts to enforce copyright, but the Bible says that we get the government we deserve, so those who steal end up with a government that steals from them.

- How do we price code contributions? The contributor must set his/her price and be able to adjust it based on market results. We should probably offer several standard pricing models to choose from, but the contributor sets the pricing within the chosen model. For example, one model that might make a lot of sense is for the code to be free for the first, say, 1000 users, followed by a sliding price discounted by volume. This encourages adoption and wide use of the code, and even when the price kicks in, it only applies from the 1001st user onward, so the average price per user rises only slowly (the amount paid is spread over all 1001+ users), and then the volume discounts kick in. The point is that the price paid is a no-brainer for the person who wants to utilize the code in their project, because by the time they scale, the price is negligible relative to their own profit model.

- How do we enable bug-fix and refinement contributions? The contributor of the bug fix or refinement sets a price, as a % or a fixed $ amount, that is added to the existing price of the code being fixed or refined. The market will choose whether to adopt this new code. The contributor of the original code will decide whether to lower his price so that the fixed code still sells at the original price. Both contributors will be free to adjust their pricing as they observe what each other does. It is an online negotiation proxy.

- How do we stop duplication or theft of code? This is not about users getting access to code for free, but rather about stopping contributions that are basically just ripoffs of existing contributions. If such a ripoff is posted outside our system, we can use copyright law to go after it, if we feel it is blatant. If the ripoff is within our system, we will have an online reporting process, and reports will be judged by experts. The reporter has to pay for this upfront, but if the accused loses, then the accused is liable to pay this cost within our system (we can deduct it from his/her earnings, etc.). Outside our system, we will go after excessive abuses at our discretion, but the contributor may also go fight in the courts. Within our system, we are the final judge, and the contributor waives the right to go to an external court. This will all be in our Terms of Service contract on signup.

- Why would anyone pay when so much free open source exists? You get what you pay for. If you want quality, well-supported code, the person doing the coding needs to be earning an income. Most of that free stuff is not readily reusable, as it is undocumented, virtually unsupported spaghetti code.
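The pricing ideas in the list above (a free tier for the first 1000 installs, volume-discounted tiers after that, and a fix contributor's surcharge added on top of the original price) can be sketched in Python. This is a hypothetical illustration only: the tier boundaries, the per-install prices, and the names `Tier`, `royalty_due`, `average_price`, and `price_with_fix` are assumptions for the example, not part of any existing Copute system.

```python
from dataclasses import dataclass

# Hypothetical tiered pricing: installs up to FREE_INSTALLS cost nothing, and each
# later install is billed at a per-install price that falls as volume grows.
FREE_INSTALLS = 1000


@dataclass(frozen=True)
class Tier:
    up_to: float       # cumulative install count where this tier ends (inclusive)
    unit_price: float  # dollars charged per install inside this tier


# Example tiers only; each contributor would choose (and later adjust) their own.
EXAMPLE_TIERS = (
    Tier(up_to=FREE_INSTALLS, unit_price=0.00),
    Tier(up_to=10_000, unit_price=0.10),
    Tier(up_to=100_000, unit_price=0.05),
    Tier(up_to=float("inf"), unit_price=0.01),
)


def royalty_due(installs: int, tiers=EXAMPLE_TIERS) -> float:
    """Total owed for `installs` installations under graduated (volume-discounted) tiers."""
    total = 0.0
    lower = 0
    for tier in tiers:
        if installs <= lower:
            break
        in_tier = min(installs, tier.up_to) - lower  # installs that fall inside this tier
        total += in_tier * tier.unit_price
        lower = tier.up_to
    return total


def average_price(installs: int, tiers=EXAMPLE_TIERS) -> float:
    """Average cost per install; it rises slowly because the free installs dilute it."""
    return royalty_due(installs, tiers) / installs if installs else 0.0


def price_with_fix(base_price: float, fix_percent: float = 0.0, fix_fixed: float = 0.0) -> float:
    """Module price after a fix contributor adds a percentage and/or fixed-dollar surcharge."""
    return base_price * (1 + fix_percent / 100) + fix_fixed
```

Under these assumed tiers, `royalty_due(1001)` is $0.10, and the original contributor could offset a fix surcharge by lowering `base_price` so that the result of `price_with_fix(...)` stays where it was before the fix.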

This looks pretty easy and a no-brainer. Has no one already attempted this?

The closest I see so far are BountySource and Donations (both appear to have failed), which allow users to band together to offer a reward for improvements to code, but which do not have the same force as the model I described above:

http://en.wikipedia.org/wiki/Comparison_of_free_software_hosting_facilities#Features

http://en.wikipedia.org/wiki/BountySource

Reference: Mechanism Design to Promote Free Market and Open Source Software Innovation

Open-source economics

Law professor Yochai Benkler explains how collaborative projects like Wikipedia and Linux represent the next stage of human organization. By disrupting traditional economic production, copyright law and established competition, they’re paving the way for a new set of economic laws, where empowered individuals are put on a level playing field with industry giants.

What this picture suggests to us is that we’ve got a radical change in the way information production and exchange is capitalized. Not that it’s become less capital intensive, that there’s less money that’s required, but that the ownership of this capital, the way the capitalization happens, is radically distributed. Each of us, in these advanced economies, has one of these (grabs nearby computer), or something rather like it — a computer. They’re not radically different from routers inside the middle of the network. And computation, storage, and communications capacity are in the hands of practically every connected person — and these are the basic physical capital means necessary for producing information, knowledge, and culture, in the hands of something like 600 million to a billion people around the planet.

What this means is that for the first time since the Industrial Revolution, the most important means — the most important components of the core economic activities — remember, we are in an information economy — of the most advanced economies, and there more than anywhere else, are in the hands of the population at large. This is completely different than what we’ve seen since the Industrial Revolution.

So we’ve got communications and computation capacity in the hands of the entire population, and we’ve got human creativity, human wisdom, human experience — the other major experience, the other major input. Which unlike simple labor -stand here turning this lever all day long — is not something that’s the same or fungible among people. Any one of you who has taken someone else’s job or tried to give yours to someone else, no matter how detailed the manual, you cannot transmit what you know, what you will intuit under a certain set of circumstances. In that, we’re unique, and each of us holds this critical input into production, as we hold this machine.

Last edited by Shelby on Sat May 28, 2011 4:25 pm; edited 2 times in total

Android does not currently support Java applets (so no Scala in the browser)

The linked issue thread also includes links on Scala -> JavaScript conversion:

http://code.google.com/p/android/issues/detail?id=7884#c8

However, Scala can be used to code Android applications.

Human language versus computer language writing & copyright implications

Shelby,

If I understand correctly, I think you are trying to gain royalties on programming work, and help others get that too. I think that's probably the wrong model.

If I compare programming to writing, what's the Biblical model? Hard to say. Today's model requires copyright protections, which I see as fraudulent and anti-Biblical. Writing is not property. What if the gospel writings were property? They were, but only in the sense that the actual scrolls were costly to make and produce, and copies cost a lot of money to make.

So, yes, I gain from my writings, via the internet, since my writings stand and remain as long term search engine landing pages for people who may be interested in buying bullion.

All the time we get buyers who buy because they know I'm a Christian, and because they agree with what I've written online.

So, that is a form of residual, but it's free market based. There is a "back room" upsell, via jhmint, that makes it work.

Of course, I did this for free anyway, for years, with bibleprophesy.org, but it did not produce immediate fruit. In fact, the lack of the kind of fruit that a person needs to live drove me away from prophecy, and into precious metals.

Think of ways, instead, to help the programmers get fruit quicker, and thus, the rewards will be more economically motivating for more people.

Not many men like us can think of long-term rewards as motivating factors.

In fact, I hope that the majority of my reward does not come in this lifetime, as this part of life is very short, compared to eternity.

Jason,

Writing and computer programming differ in one crucial way: code can only be read by about 3 people (the average number of developers of an open source code base uploaded to GitHub or the like).

It takes even an expert such as myself days of dedication to read another person's code, load it all into my head, and eventually comprehend it. Lesser programmers will be even more discouraged, and programmers as a whole are only 1/10 of 1% of the world's population; 99.9% of people are unable to read code at all.

So the fundamental difference is that writing speaks directly to the reader and thus can build on, and profit from, derivative upsell effects.

As loneranger said, programming sucks because most programmers (the average ones, who don't get in early on the rare lucky startup that goes IPO) slave away, but 99% of the fruits go to the large corporation that controls access to the users. The users don't see your modules of code; they only see the final product, and not as code, but as a working appliance.

What I am trying to do is fix the integration economy-of-scale problem, so that the fruits go more proportionally to the programmers. I am aiming to expand their fruits orders-of-magnitude.

The reason copyright is needed is that, since end users don't read the code, code theft completely hides any upsell benefit to the original code author. If a programmer spends weeks writing, supporting, and debugging some 1000 lines of code (the average is 30 debugged lines per day), then someone can steal his work in seconds.

I am not proposing to enforce copyright. I have said that up to 1000 end users (per program created), the code should be free. So people can freely copy, but if they get into business level volumes, then they need to pay. If programmers don't get paid for their independent work, then they have to go work as slaves for large corporations who take 99% of the fruit. God does not want that system, as it is retarding progress on spreading knowledge.

There is no restriction on adding to existing work and incorporating it into new work. The only copyright restriction I proposed is that they must not give away, in business volumes, that which is not free. In other words, the big corporations are not allowed to enslave knowledge.

No enforcement will ever be necessary. Corporations don't want to violate copyright, as they lose face in the development community.

I may never need to take a royalty, I may just end up selling my code in the system, and charging perhaps a user subscription fee for access to the system. For sure I won't take more than 10% of the increase in fruits I help create, which is what the Bible says to do.

P.S. Tangentially, one of the problems the Copute language hopes to solve is to isolate understanding of code into smaller orthogonal modules, making it more efficient to gain an understanding of code. This orthogonality (referential transparency) also aids reuse.

Shelby wrote:I am not proposing to enforce copyright. I have said that up to 1000 end users (per program created), the code should be free. So people can freely copy, but if they get into business level volumes, then they need to pay. If programmers don't get paid for their independent work, then they have to go work as slaves for large corporations who take 99% of the fruit. God does not want that system, as it is retarding progress on spreading knowledge.

There is no restriction on adding to existing work and incorporating it into new work. The only copyright restriction I proposed is that they must not give away, in business volumes, that which is not free. In other words, the big corporations are not allowed to enslave knowledge.

No enforcement will ever be necessary. Corporations don't want to violate copyright, as they lose face in the development community.

Let me clarify. I mean that any person would be able to create a derivative work, and they would not be liable to pay anything, as long as they distribute that derivative work on Copute.com or to fewer than 1000 end users outside of Copute.com. They could even create multiple derivative works, and each one would have its own 1000 threshold. And multiple people could each create their own derivative works, and each one would have the proposed 1000 threshold. So the threshold will never be triggered, except for a successful derivative work that is very popular (and thus assumed to generate business revenue, since popularity implies income, for example from Google ad revenue).
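The per-derivative threshold described above can be expressed as a small check. In this hypothetical Python sketch, each derivative work is tracked separately and only end users reached outside Copute.com count toward the threshold. Whether exactly 1000 users triggers payment is an off-by-one detail the posts leave open; this sketch assumes the first 1000 are free. The `DerivativeWork` type and its fields are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List

# Assumed threshold: the first 1000 end users reached outside Copute.com are free.
THRESHOLD = 1000


@dataclass
class DerivativeWork:
    name: str
    author: str
    external_end_users: int = 0  # end users reached outside Copute.com only


def owes_payment(work: DerivativeWork, threshold: int = THRESHOLD) -> bool:
    """True once this particular derivative work has more outside end users than the threshold."""
    return work.external_end_users > threshold


def works_owing(works: List[DerivativeWork]) -> List[str]:
    """Each derivative work is judged against its own threshold, independently of the others."""
    return [w.name for w in works if owes_payment(w)]
```

Because the count is kept per work, one author's many small forks never add up to a payment; only an individually popular derivative crosses the line.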

So the copyright won't need to be enforced, because those entities that would exceed the derivative work distribution threshold would also have a strong incentive not to cheat and steal, because they are earning a lot more from the code than they are spending. Cutting off their lifeblood of code coming from Copute.com and the developer community would be shooting themselves in the foot.

The copyright only exists as a formal way of codifying that society will punish theft. But the actual enforcement is self-enforcement, because the implications of violation are such that cooperation is more attractive economically.

Last edited by Shelby on Wed May 25, 2011 6:59 am; edited 3 times in total

additional point: computer programming is the inverse of manufacturing

In the manufacture of goods, the design is replicated over and over again, so if 1 million products are produced and the cost of the design was 100 times the cost to produce each unit, then the design becomes 1/10,000 of the labor input. So this is why patents don't make any sense.

In computer programming, replication costs nothing, so the cost of design labor is never a small proportion of the total cost. In fact, this is why open source trumps closed source: the cost of design labor is such a huge proportion of the business model that 1000s of people sharing the design effort cannot be matched by a closed source model.

And this is why taking programming for free is theft and violates the 10 Commandments. Rather it is appropriate to share the cost of the labor of design with all the other users, so the cost is amortized and reduced per unit.
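The arithmetic behind the two paragraphs above can be written out. Here D is the one-time design cost, c the cost to produce or replicate one unit, N the number of units (or users), and f the design share of total cost; these symbols are introduced only for illustration.

```latex
\begin{aligned}
f &= \frac{D}{D + Nc} \\
\text{manufacturing, } D = 100c,\ N = 10^{6}:\quad f &= \frac{100}{100 + 10^{6}} \approx \frac{1}{10\,000} \\
\text{software, } c \to 0:\quad f &\to 1 \\
\text{design cost amortized per user} &= \frac{D}{N}
\end{aligned}
```

When replication is nearly free, design is nearly the whole cost, and the only way to lower it per unit is to spread D over more users.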

P.S. I hope you are getting a clue that computer programming is a very special phenomenon that has no other analog in our human existence. This is why I am becoming so adamant that Copute must be completed.

What is proposed to go on the Copute.com homepage

http://copute.com

Copute - “massively cooperative software progress”

Copute is two projects under development:

* a new open source computer language to optimize reuse of programming code

* a for-profit repository for a more powerful economic model for open source

Imagine an individual programmer could be paid, from those distributing derivative works for-profit, simply by submitting generally reusable code modules to a repository. Imagine individual programmers could quickly create derivative works without the resources of a large corporation, due to the wide scale reusability of a new computer language model, coupled with such a repository. Imagine the orders-of-magnitude of increased economic activity in information technology that would be unleashed on the world.

Can you help? We are most in need of a software engineer who is (or thinks he/she can quickly become) a compiler expert and wants to help complete the Copute language. A suitable compensation and incentive package can be discussed, including the option to work from your own home office in any country in the world. Do you have the desire to join the next Google at the earliest stage? Can you help us change the world? Do you have this level of passion for your work? Please email us: shelby@coolpage.com

Here follows the economic theory motivating our desire.

Open Source Lowers Cost, Increases Value

Closed source cannot compete with open source, because the cost of programming is nearly the entire cost for a software company. For example, if the design of a manufactured item costs 1000 times the cost to manufacture 1 unit, then when a million units are produced the design cost is an insignificant 1/1000th of the total cost. The replication and distribution costs of software are approaching zero, thus the design (programming) cost is approaching the total cost. Thus any company that is not sharing the programming cost with other companies is resource-constrained relative to those companies that are sharing their programming costs. Tangentially, this is why patents on software retard the maximum social value obtained from programming investment.

For-profit software companies gain market leverage and market-fitness by diversifying their products and/or services from the shared code at the top level of integration.

With a standardized open source license on code, corporations can spontaneously share the cost of programming investment, without the friction, delay, risk, uncertainty, and complexity of multiple, multifarious, bilateral, ad hoc sharing negotiations.

Increased code reuse (sharing) lowers the programming cost for corporations, enabling them to focus capital on their market-fitness integration expertise-- where they generate profits.

Project Granularity of Code Re-use Limits Sharing, Cost Savings, and Value

Open source has reduced the total programming cost to corporations and end users, but due to the project-level granularity of code reuse (i.e. sharing), this reduction is not maximized, and costs have not decreased for smaller companies and individual programmers in those cases where they cannot spontaneously build derivative code incorporating only parts of projects. Although there has been a boom in the diversity and market-fitness of software products, it is not maximized, which is the manifestation of the lack of cost reduction in the aforementioned cases.

The scale of open source reuse is limited by the general lack of fine-grained code reuse, inherent in the lack of referential transparency in existing software languages. Instead, we primarily have reuse of entire projects, e.g. multiple companies from the exchange economy sharing programming investment in Linux, the Apache server, the Firefox browser, etc. It is not easy to reuse sub-modules (i.e. parts) of these projects in other projects, due to the lack of forethought and referential transparency (i.e. too much "spaghetti code" littered with hidden, inextricable interdependencies) in existing software languages and designs. The granularity of code reuse dictates the scale of shared investment, because, for example, fewer companies would find an incentive to invest in an entire project than to invest in reusable code modules within projects, where those modules could be used in a greater diversity of derivative software. If the granularity of code reuse is increased by orders-of-magnitude via referential transparency in software languages, then the sharing of programming cost will also increase by orders-of-magnitude. With orders-of-magnitude lower programming cost, smaller companies and individual programmers will be able to move to the top of the food chain of integration, and there will be an orders-of-magnitude faster rate of progress and diversity of market-fitness in software.
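To make the contrast concrete, here is a small Python illustration (not Copute code) of a function with a hidden dependency on shared mutable state versus a referentially transparent one. The names `config`, `price_with_tax_impure`, and `price_with_tax` are hypothetical.

```python
# Hidden interdependency: the result depends on module-level mutable state,
# so this function cannot be reused without also dragging in `config`.
config = {"tax_rate": 0.2}


def price_with_tax_impure(amount: float) -> float:
    return amount * (1 + config["tax_rate"])


# Referentially transparent: every input is an explicit parameter, so the same
# arguments always give the same result and the function can be lifted into
# any other project unchanged.
def price_with_tax(amount: float, tax_rate: float) -> float:
    return amount * (1 + tax_rate)
```

Changing `config` anywhere in the program silently changes what `price_with_tax_impure(100.0)` returns, while any call to `price_with_tax(100.0, 0.2)` can be replaced by its value wherever it appears, which is what makes a module safe to extract and reuse on its own.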

Increased Granularity of Re-use Will Transition from Reputation to Exchange Economy

As granularity of reuse of programming code increases via new software languages that incorporate referential transparency paradigms, the economics of contribution will need to become more fungible. Currently the economics of open source is funded by large corporations which have clear strategic paybacks for the projects they choose to invest in. At the project level of granularity, strategic ramifications are reasonable to calculate. However, as granularity of reuse increases, the diversity of reuse scenarios will increase exponentially or faster, so it will become impossible to calculate a payback for a specific contribution.

Although some open source proponents have postulated that the "gift culture"[1] drives contribution, as diversity of contribution increases into smaller parts (instead of project-level contribution), the value of reputation will plummet in areas that reward free contribution. First, it will become much less necessary to gain social status in order to get other people to agree to work with you, as contribution will become disconnected from project objectives and a leader's ability to make a project succeed. Instead, contributors will need another payback incentive. Second, since the economic inputs of large corporations will diminish in significance, the value of reputation in gaining income (a job) will diminish. Instead individual programmers and smaller companies will create their own jobs, by creating more derivative software, given the lower cost of reuse of smaller modules. So the incentive to reuse increases as cost of reuse is lowered via increased granularity of referential transparency, but what will motivate contribution if the value of reputation diminishes? The answer is the value of reciprocity. Those who want to reuse, will become willing to contribute, if others who want to reuse are willing to contribute. This is an exchange economy.

Some argue that gifting contributions is driven by the incentive to have your contribution merged into the code of the derivative software you use[3], yet with a referentially transparent programming model and automated builds from a repository, customized builds may require no ongoing maintenance. Moreover, if a bug fix or improvement increases the value (as defined below) of the source contribution it fixes, even if the price of the source contribution is lowered by the price requested for the fix, then existing derivatives are not discouraged from adopting the fix. Thus the owner of the source contribution, not the owner of the derivative work, could become the gatekeeper for merging fixes into derivative works, which decentralizes the process compared to the gift economic model currently employed for open source.

Studying history, there are no instances where the "gift culture" scaled to an optimal free market. Gift cultures exist only where inefficient social hierarchies are subsidized by abundance, e.g. open source currently funded via large corporate contributions driven by the abundant unmet end user demand for software. However, every year in China, 1 out of 6 million college graduates can't find a job, and many more are underpaid and underutilized, the world is currently at a debt-saturation crossroads, and it is not clear how there will be sufficient abundance of jobs to employ the billions in the developing world. Exchange economies are how the free market optimally anneals best-fit and diversity in times of lack of the subsidy of abundance. Reputation is measured in fungible units, called money or in our case, more specifically the value of current active contributions.

The challenge is how to make the exchange of value from small reusable module contributions fungible and efficient. The value of a module of code is determined by its exchange price and the quantity of derivative code that employs it. The price should be increased or decreased to maximize this value. The price is paid periodically in bulk by those distributing for-profit derivative software, not as micro-payments. Typically the price would apply to a per-installation (not per-user) license, where upgrades (fixes and improvements) might be free to existing installations because the code is continually reused in new derivative works that pay the price. Thus the proposed exchange economic model has an advantage over the gift model, in that upgrade costs are amortized over derivative software proliferation, instead of via the service contract model.[4]
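The rule that the price should move up or down to maximize value can be sketched as a simple search, assuming value is taken as price multiplied by the number of derivative works adopting the module. The linear demand curve in `adoptions_at` is purely an assumption for illustration; in practice the contributor would observe adoption from market results rather than know such a curve in advance.

```python
def adoptions_at(price: float) -> float:
    """Hypothetical demand curve: derivative works adopting the module at a given price."""
    return max(0.0, 1000.0 - 200.0 * price)


def module_value(price: float) -> float:
    """Value as assumed here: exchange price times quantity of derivative use."""
    return price * adoptions_at(price)


def best_price(candidates):
    """Pick the candidate price that maximizes module value."""
    return max(candidates, key=module_value)


# Candidate prices from $0.00 to $5.00 in 1-cent steps.
prices = [p / 100 for p in range(0, 501)]
```

With this made-up curve, `best_price(prices)` lands at $2.50: a price of zero maximizes adoption but yields no value, and too high a price chokes off adoption, which is why the contributor adjusts in both directions.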

Copyright Codifies the Economic Model

So if for-profit derivative use of a contribution has a price, then contributing stolen code could destroy value. This is copyright, and it is analogous to the use of copyright in the gift (reputation) economy, where existing standard open source licenses force derivative works to give credit to the source contribution. In each economic model, copyright codifies the restrictions that define that model. Closed source is a command economy, free open source is a gift (reputation) economy, and priced open source is an exchange economy. Note that since the gift (reputation) economy relies on social status and an abundance subsidy, it does not economically maximize spontaneous individual efforts, i.e. it has similarities to socialism.

Economic Model   Copyright Restriction
--------------   ---------------------------
Command          no derivatives, no copies
Gift             no delisting the source
Exchange         no selling of stolen source

Human language writing is mostly a gift (reputation) economy, because the writer gains upsell benefits as his ideas and reputation grow, even via those who redistribute the writings without paying royalties. Programming differs from writing in a crucial aspect: end users never read the code, and 99.9% of the population are not programmers[2] (and even amongst programmers, most do not read the code of every piece of software they use). In the current hacker gift (reputation) economy model, programmers can gain value by building reputation amongst a smaller circle of peers who know their work well, and given that the funding for contribution comes from large corporations whose strategic interests are met by the free sharing model, preventing theft adds no value. However, if granularity of reuse incentivizes a move towards more of an exchange economy model, then theft is a parasite on value, as there are fewer upsell benefits when reputation morphs from small circles of social hierarchy into wider-scale exchange value, and so copyright will morph to codify that theft is not allowed. Note that value in the exchange economy is based on the quantity of derivative reuse, so it creates more value to incentivize free reuse where those derivatives will be reused in paid derivatives. Hence the differential pricing in exchange economics, i.e. free use for non-profit purposes or upstart business volumes, and volume discounts for for-profit use.

There is not likely to be a complete shift from reputation to exchange economics, but rather a blending of the two.

[1] Eric Raymond, Homesteading the Noosphere, The Hacker Milieu as Gift Culture, http://www.catb.org/~esr/writings/homesteading/homesteading/ar01s06.html

[2] http://stackoverflow.com/questions/453880/how-many-developers-are-there-in-the-world

[3] Eric Raymond, The Magic Cauldron, The Inverse Commons, http://www.catb.org/~esr/writings/cathedral-bazaar/magic-cauldron/ar01s05.html

[4] Eric Raymond, The Magic Cauldron, The Manufacturing Delusion, http://www.catb.org/~esr/writings/cathedral-bazaar/magic-cauldron/ar01s03.html

Made significant improvements to the economic theory

The section "Increased Granularity of Re-use Will Transition from Reputation to Exchange Economy" has been significantly improved, as I read and refuted Eric Raymond's arguments against an exchange economic model in his essay The Magic Cauldron.

Note I am not disagreeing with Mr. Raymond's other points about open source. I disagree with him only on the narrow point of gift (reputation) vs. exchange, as the correct model for maximizing progress in open source. And I don't entirely disagree with him about the value of the gift (reputation) model, as I state at the end of my theory page, that we are likely to see a blending of the two models.

I think the theory is extremely important. If you were an angel investor or software engineer who wants to seriously consider the Copute opportunity, you would want to know the detailed theory of why it is being done, before you leap with your capital or career.

SPOT

Functional programming by itself is not enough to maximize modularity and composability.

So multi-paradigm languages attempt to mix the best of OOP with the best of FP:

http://c2.com/cgi/wiki?ObjectFunctional

Critical to this is SPOT (single-point-of-truth), which is the ability to localize specification in order to maximize modularity and composability (e.g. enables inversion-of-control or Hollywood principle).
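As a rough illustration of the Hollywood principle ("don't call us, we'll call you") mentioned above, here is a minimal Python sketch in which a hypothetical framework function owns the control flow and calls back into user-supplied code. It is a generic example of inversion of control, not Copute syntax; `run_pipeline`, `shout`, and `non_empty` are illustrative names.

```python
from typing import Callable, Iterable, List


def run_pipeline(items: Iterable[str],
                 transform: Callable[[str], str],
                 accept: Callable[[str], bool]) -> List[str]:
    """Framework-owned loop: it decides when to call the user's hooks."""
    results = []
    for item in items:
        value = transform(item)  # the framework calls user code...
        if accept(value):        # ...and calls it again to decide what to keep
            results.append(value)
    return results


# User code only supplies the policies; each one is specified in exactly one place.
def shout(s: str) -> str:
    return s.upper()


def non_empty(s: str) -> bool:
    return len(s) > 0
```

For example, `run_pipeline(["a", "", "b"], shout, non_empty)` returns `["A", "B"]`. Swapping a policy means changing one function at its single point of definition, without touching the loop.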

Critical to this is Scala's unique algorithm for multiple inheritance. I improved this in a crucial way, by unconflating the Scala trait into an interface and mixin, and adding static methods. I found this to be necessary to maximize modularity and composability, when for example designing the monadic OOP code that I had sent you the link for in a prior email.
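Copute's own syntax is not shown in this post, so the following is only a Python analogy of the idea of splitting a trait into a pure interface (abstract methods only) and a separate mixin (reusable implementation), plus a static method. Note that Python's multiple inheritance uses the C3 linearization, which differs in detail from Scala's trait linearization; the class names `Shape`, `DescribeMixin`, and `Square` are hypothetical.

```python
from abc import ABC, abstractmethod


class Shape(ABC):
    """Interface: declares what a shape can do, with no implementation."""

    @abstractmethod
    def area(self) -> float: ...


class DescribeMixin:
    """Mixin: reusable implementation that relies only on the Shape interface."""

    def describe(self) -> str:
        return f"{type(self).__name__} with area {self.area():.2f}"


class Square(DescribeMixin, Shape):
    def __init__(self, side: float) -> None:
        self.side = side

    def area(self) -> float:
        return self.side * self.side

    @staticmethod
    def unit() -> "Square":
        """Static factory method: belongs to the class and needs no instance."""
        return Square(1.0)
```

Because the interface carries no implementation and the mixin carries no state of its own, either can be combined with other classes independently; for example, `Square(2.0).describe()` returns `"Square with area 4.00"`.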

If you look at other multi-paradigm attempts, e.g. D Language, etc., you find they don't have this unique multiple inheritance, nor the improvements Copute makes to Scala.

What I also did with Copute was generalize purity (referential transparency) to the class model and closures.

So this is why Copute is a major advance over every language I have studied. If I could find Copute's features in an existing language, I would be very happy.

Some links:

http://en.wikipedia.org/wiki/Single_Point_of_Truth

http://en.wikipedia.org/wiki/Single_Source_of_Truth

http://en.wikipedia.org/wiki/Hollywood_principle

Constructors are considered harmful (must read, as it covers the major points of this post)


Last edited by Shelby on Sat Nov 26, 2011 7:33 am; edited 3 times in total

Expression Problem


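Google it to know what it is. In short, the problem is how to add both new data variants and new operations to a program without modifying existing code, while retaining static type safety.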

Only statically typed languages can solve it. It can be solved with functional extension or OOP extension.

I discuss this in the issue below, and especially in Comment #2 I explain why SPOT is superior to ad-hoc polymorphism (duck typing):

http://code.google.com/p/copute/issues/detail?id=39
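Below is a deliberately simplified Scala sketch of the OOP-extension direction, with hypothetical names. A new variant (`Neg`) and a new operation (`show`) are both added without editing the original classes. The compiler also rejects a `ShowAdd` whose children are not `ShowExp`. Fuller Scala solutions (e.g. with abstract type members) handle combining independent extensions. This sketch does not attempt that.

```scala
// Original code: one data hierarchy with one operation.
trait Exp { def eval: Int }
class Lit(val n: Int) extends Exp { def eval: Int = n }
class Add(val l: Exp, val r: Exp) extends Exp { def eval: Int = l.eval + r.eval }

// Extension 1: a new variant, added without touching the code above.
class Neg(val e: Exp) extends Exp { def eval: Int = -e.eval }

// Extension 2: a new operation, added by refining the hierarchy.
trait ShowExp extends Exp { def show: String }
class ShowLit(n0: Int) extends Lit(n0) with ShowExp { def show: String = n.toString }
class ShowAdd(sl: ShowExp, sr: ShowExp) extends Add(sl, sr) with ShowExp {
  // Children are statically required to be ShowExp, so `show` is type-safe.
  def show: String = s"(${sl.show} + ${sr.show})"
}

val sum = new ShowAdd(new ShowLit(1), new ShowLit(2))
println(s"${sum.show} = ${sum.eval}")
```

The remaining cost is visible: `Neg` only gets `show` once someone writes a corresponding `ShowNeg`, and `new ShowAdd(new Lit(1), new ShowLit(2))` does not compile.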


Programming languages chart


http://www.pogofish.com/types.png

http://james-iry.blogspot.com/2010/05/types-la-chart.html

Copute will be on the "pure" row to the right of Clean, in the "higher-kinded" column.
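For readers unfamiliar with the chart's "higher-kinded" column: a language is higher-kinded if it can abstract over type constructors such as `List` or `Option`, not just over types. Below is a minimal Scala sketch. `Functor` is the conventional name for this abstraction, but the code is written here for illustration, not taken from a library.

```scala
// F[_] is a type-constructor parameter: that is what "higher-kinded" means.
trait Functor[F[_]] {
  def map[A, B](fa: F[A])(f: A => B): F[B]
}

object ListFunctor extends Functor[List] {
  def map[A, B](fa: List[A])(f: A => B): List[B] = fa.map(f)
}

object OptionFunctor extends Functor[Option] {
  def map[A, B](fa: Option[A])(f: A => B): Option[B] = fa.map(f)
}

// Written once, generic over any F that has a Functor.
def double[F[_]](fa: F[Int], functor: Functor[F]): F[Int] = functor.map(fa)(_ * 2)

println(double(List(1, 2, 3), ListFunctor))
println(double(Option(5), OptionFunctor))
```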

Note that the chart does not show whether nominal or structural typing is used, nor how aggressive the type inference is.
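Scala 3 supports both kinds of typing, so it can show the distinction the chart omits. In the minimal sketch below, `nominalLen` accepts only classes that declare `extends HasLength`. `structuralLen` accepts anything with a matching `length` member. All names are hypothetical. The structural call goes through Java reflection, which is why the import is needed and why it is slower.

```scala
import scala.reflect.Selectable.reflectiveSelectable

// Nominal: conformance must be declared by name.
trait HasLength { def length: Int }
class Rope(parts: List[String]) extends HasLength {
  def length: Int = parts.map(_.length).sum
}

// Has a `length` member but does NOT declare `extends HasLength`.
class Word(s: String) { def length: Int = s.length }

def nominalLen(x: HasLength): Int = x.length
// Structural: any value whose shape matches is accepted.
def structuralLen(x: { def length: Int }): Int = x.length

println(structuralLen(new Word("abcd")))
// nominalLen(new Word("abcd")) would be a compile-time error.
```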


JavaCC LL(k) lexer and parser generator


1. Copute's grammar requires tracking indentation around newlines, e.g. NLI means newline-indent:

http://copute.com/dev/docs/Copute/ref/grammar.txt

This is done so that Copute can fix some common human errors that occur around newlines, when semicolons ( ; ) are not required to terminate a line of code (as is the case in Copute and JavaScript).

JavaCC automatically generates a lexer, but I can see no way to tell it to track indenting to qualify the newline tokens sent to the parser:

http://www.cs.lmu.edu/~ray/notes/javacc/

http://www.engr.mun.ca/~theo/JavaCC-Tutorial/javacc-tutorial.pdf

http://javacc.java.net/doc/javaccgrm.html

2. JavaCC's .jj BNF specification syntax is too verbose (compared to Copute's grammar format). It might be possible to automatically convert Copute's terser BNF format to a .jj file, which could mitigate this issue.

3. JavaCC generates Java code and although I could probably interface Java and Scala code (since I want to write the rest of the compiler in Scala), this is fugly. I would like to use Scala (eventually bootstrapped to Copute) code, to make the code elegant and easy-to-read.

4. JavaCC appears to generate suboptimal parse tables and parsing code.

5. JavaCC appears to be a monolithic (tangled spaghetti) design (apparently I can't even untangle the lexer from the parser). I would prefer to build a parser generator composed of multiple composable modules, e.g. the generation of First(k) and Follow(k) sets should be orthogonal to a module that generates a parse table from those sets, and orthogonal yet again to a module that generates Scala code for the parser that integrates with the parse table, etc. The point is I would like to have source code I can wrap my mind around.

6. I would like a tight coupling of the naming in the BNF file and the class names of the AST.

7. The documentation for JavaCC and JJTree seems overly complex. Surely we can simplify this.

8. The time we would spend trying to shoehorn JavaCC might be enough to code our own superior parser generator. I think the lexer for Copute needs to be hand-coded, not generated from a DFA generator.
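Items 1 and 8 above argue for a hand-coded lexer that tracks indentation. Below is a minimal Scala sketch of that idea, under loud assumptions. Only the `NLI` token name comes from the Copute grammar; `NL` and `Ident` are hypothetical. `NLI` is assumed here to mean "the next non-blank line is indented deeper than the previous one", and the real grammar may define it differently.

```scala
import scala.collection.mutable.ListBuffer

// Hypothetical token set; only the name NLI comes from the Copute grammar.
sealed trait Tok
final case class Ident(text: String) extends Tok
case object NL extends Tok  // newline, next line not indented deeper
case object NLI extends Tok // newline, next line indented deeper (assumed meaning)

// Counts leading spaces only (assumption: tabs are not used for indentation).
def indentOf(line: String): Int = line.takeWhile(_ == ' ').length

def tokenize(src: String): List[Tok] = {
  // Blank lines are skipped so they cannot affect the indentation decision.
  val lines = src.split('\n').toVector.filter(_.trim.nonEmpty)
  val out = ListBuffer.empty[Tok]
  for (i <- lines.indices) {
    if (i > 0) {
      // The lexer, not the parser, carries the indentation state.
      out += (if (indentOf(lines(i)) > indentOf(lines(i - 1))) NLI else NL)
    }
    lines(i).trim.split("\\s+").foreach(w => out += Ident(w))
  }
  out.toList
}

println(tokenize("a b\n  c\nd"))
```

The parser then only sees qualified newline tokens. Extra lexer state like this is easy to keep in hand-written code.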

> I tend to agree with you: sometimes in the long run it is much, much better to code it yourself than to rely completely on a library that doesn't do exactly what you need, or is just too chaotic for you to ever fully understand, and then waste time trying to modify it. Those types of problems tend to get worse as the project progresses as well. I also place a very high value on fully understanding the code I use if I need to modify it, and the best way to do that is to write it yourself. Personally, I find I can very quickly decide which 3rd-party libraries I like and which I don't after giving them some review, and it seems you've already decided you don't like JavaCC.

Additional points:

A) One milestone test of a new language is writing the compiler for that language in that language itself (a/k/a bootstrapping).

B) Writing the parser with a more modular design means that not only can we understand it more readily, but so can others who might want to contribute. Although JavaCC has many contributors and users, they may not share our needs and focus, i.e. Java is far from Copute and Scala in principles, culture, features and focus:

http://copute.com/dev/docs/Copute/ref/intro.html#Java

C) Off the top of my head, I doubt the parser generator will take more time to code than figuring out how to make the lexer we need work with JavaCC's integrated and apparently inseparable lexer.
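Point 5 above asks for First(k) and Follow(k) computation to be a module orthogonal to table generation and code generation. Below is a sketch of such a module in Scala, for k = 1 only, using a hypothetical grammar encoding. It consumes a grammar and returns FIRST sets. Nothing in it depends on how those sets are later turned into a table.

```scala
// Hypothetical grammar encoding: nonterminal -> alternatives; each alternative
// is a list of symbols, and an empty list is an epsilon production. Any symbol
// that is not a key of the map is treated as a terminal.
type Grammar = Map[String, List[List[String]]]
val Eps = "eps" // marker meaning "can derive the empty string"

// FIRST(1) sets by fixed-point iteration. This module only reads the grammar;
// it knows nothing about parse tables or generated code.
def firstSets(g: Grammar): Map[String, Set[String]] = {
  def firstOfSeq(seq: List[String], approx: Map[String, Set[String]]): Set[String] =
    seq match {
      case Nil => Set(Eps)
      case s :: rest =>
        val fs = if (g.contains(s)) approx(s) else Set(s)
        if (fs.contains(Eps)) (fs - Eps) ++ firstOfSeq(rest, approx) else fs
    }

  var first: Map[String, Set[String]] = g.map { case (nt, _) => nt -> Set.empty[String] }
  var changed = true
  while (changed) {
    // Sets only grow and are bounded by the symbol set, so this terminates.
    val next = g.map { case (nt, alts) =>
      nt -> (first(nt) ++ alts.flatMap(alt => firstOfSeq(alt, first)))
    }
    changed = next != first
    first = next
  }
  first
}

// Textbook expression grammar: E -> T E' ; E' -> + T E' | eps ; T -> id | ( E )
val exprGrammar: Grammar = Map(
  "E" -> List(List("T", "E'")),
  "E'" -> List(List("+", "T", "E'"), Nil),
  "T" -> List(List("id"), List("(", "E", ")"))
)

println(firstSets(exprGrammar))
```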

=================================

http://members.cox.net/slkpg/


SLK currently supports the C, C++, Java and C# languages on all platforms. Note that the SLK parsers primarily consist of tables of integers as arrays and a very small parse routine that only uses the simple, and universally supported features of the language. This is in contrast to parser generators that produce recursive descent code. They must force a certain programming model on the user because the parsing code is intermixed with the user action code. SLK places no limits or requirements on the coding style of the user.

http://members.cox.net/slkpg/documentation.html#SLK_Quotations

Performance comment from Fischer & LeBlanc (1991), page 120:

Since the LL(1) driver uses a stack rather than recursive procedure calls to store symbols yet to be matched, the resulting parser can be expected to be smaller and faster than a corresponding recursive descent parser.
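Below is a minimal Scala sketch of a table-driven LL(1) driver that makes the Fischer & LeBlanc point concrete. Symbols still to be matched live on an explicit stack, and the only grammar-specific part is the table. The grammar is a hypothetical textbook expression grammar, and its table is filled in by hand. A `Map` keyed by strings stands in for SLK's integer arrays, for readability.

```scala
// Hypothetical grammar:  E -> T E' ;  E' -> + T E' | eps ;  T -> id | ( E )
// Key: (nonterminal, lookahead terminal); value: right-hand side to push.
val table: Map[(String, String), List[String]] = Map(
  ("E", "id") -> List("T", "E'"),
  ("E", "(") -> List("T", "E'"),
  ("E'", "+") -> List("+", "T", "E'"),
  ("E'", ")") -> Nil,
  ("E'", "$") -> Nil,
  ("T", "id") -> List("id"),
  ("T", "(") -> List("(", "E", ")")
)
val nonterminals = Set("E", "E'", "T")

// The driver: symbols yet to be matched are on an explicit stack. The
// @tailrec loop compiles to a plain loop, so nesting depth in the input
// never grows the call stack.
def parse(tokens: List[String]): Boolean = {
  @annotation.tailrec
  def loop(stack: List[String], input: List[String]): Boolean = (stack, input) match {
    case (Nil, Nil) => true // both exhausted: accept
    case (Nil, _) | (_, Nil) => false
    case (top :: rest, tok :: more) =>
      if (nonterminals.contains(top))
        table.get((top, tok)) match {
          case Some(rhs) => loop(rhs ++ rest, input) // expand the nonterminal
          case None => false // no table entry: syntax error
        }
      else if (top == tok) loop(rest, more) // match a terminal (including "$")
      else false
  }
  loop(List("E", "$"), tokens :+ "$")
}

println(parse(List("(", "id", "+", "id", ")")))
```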

Last edited by Shelby on Wed Jun 15, 2011 5:24 am; edited 2 times in total

Downside of multiplicitous syntax


Having one standard way to write common things in a language makes the code more readily readable (i.e. comprehensible) by a wider audience.


===============

Someone else recently mentioned this:

http://stackoverflow.com/questions/8303817/scala-9-ways-to-define-a-method

A programming language which requires mentally parsing multifarious syntactical formations increases the difficulty of becoming proficient at reading such a language.

For example Scala has numerous ways to write a method that inputs a String and returns its length as an Int:

- Code:

def len( s : String ) : Int = s.length

def len( s : String ) : Int = { s.length }

def len( s : String ) : Int = { return s.length }

val len : String => Int = s => s.length

val len = (s: String) => s.length

val len : String => Int = _.length

object len {
  def apply( s : String ) : Int = s.length
}

And two ways to write methods that accept more than one argument (types are inferred in this example):

- Code: