Computers:

5 posters

Page 7 of 11

Page 7 of 11 •  1, 2, 3 ... 6, 7, 8, 9, 10, 11

1, 2, 3 ... 6, 7, 8, 9, 10, 11

additional point: computer programming is the inverse of manufacturing

additional point: computer programming is the inverse of manufacturing

In the manufacture of goods, the design is replicated over and over again, so if 1 million products are produced and the cost of the design was 100 times the cost to produce each unit, then the design becomes 1/10,000 of the labor input. So this is why patents don't make any sense.

In computer programming, the replication costs nothing, thus the cost of the labor of design is never a small proportion of the cost. In fact, this is why open source trumps closed source, because the cost of labor of design is such a huge proportion of the business model, that 1000s of people sharing effort of design can not be matched by a closed source model.

And this is why taking programming for free is theft and violates the 10 Commandments. Rather it is appropriate to share the cost of the labor of design with all the other users, so the cost is amortized and reduced per unit.

P.S. I hope you are getting a clue that computer programming is very special phenomenon that has no other analog in our human existence. This is why I am becoming so adamant that Copute must be completed.

In computer programming, the replication costs nothing, thus the cost of the labor of design is never a small proportion of the cost. In fact, this is why open source trumps closed source, because the cost of labor of design is such a huge proportion of the business model, that 1000s of people sharing effort of design can not be matched by a closed source model.

And this is why taking programming for free is theft and violates the 10 Commandments. Rather it is appropriate to share the cost of the labor of design with all the other users, so the cost is amortized and reduced per unit.

P.S. I hope you are getting a clue that computer programming is very special phenomenon that has no other analog in our human existence. This is why I am becoming so adamant that Copute must be completed.

What is proposed to go on the Copute.com homepage

What is proposed to go on the Copute.com homepage

http://copute.com

Copute - “massively cooperative software progress”

Copute is two projects under development:

* an open source new computer language to optimize reuse of programming code

* a for-profit repository for a more powerful economic model for open source

Imagine an individual programmer could be paid, from those distributing derivative works for-profit, simply by submitting generally reusable code modules to a repository. Imagine individual programmers could quickly create derivative works without the resources of a large corporation, due to the wide scale reusability of a new computer language model, coupled with such a repository. Imagine the orders-of-magnitude of increased economic activity in information technology that would be unleashed on the world.

Can you help? We are most in need of a software engineer who is (or thinks he/she can quickly become) a compiler expert, and wants to help complete the Copute language. A suitable compensation and incentive package can be discussed, including the option to work from your own home office from any country in the world. Do you have the desire to join the next Google at earliest stage? Can you help us change the world? Do you have this level of passion for your work? Please email us: shelby@coolpage.com

Here follows the economic theory motivating our desire.

Copute - “massively cooperative software progress”

Copute is two projects under development:

* an open source new computer language to optimize reuse of programming code

* a for-profit repository for a more powerful economic model for open source

Imagine an individual programmer could be paid, from those distributing derivative works for-profit, simply by submitting generally reusable code modules to a repository. Imagine individual programmers could quickly create derivative works without the resources of a large corporation, due to the wide scale reusability of a new computer language model, coupled with such a repository. Imagine the orders-of-magnitude of increased economic activity in information technology that would be unleashed on the world.

Can you help? We are most in need of a software engineer who is (or thinks he/she can quickly become) a compiler expert, and wants to help complete the Copute language. A suitable compensation and incentive package can be discussed, including the option to work from your own home office from any country in the world. Do you have the desire to join the next Google at earliest stage? Can you help us change the world? Do you have this level of passion for your work? Please email us: shelby@coolpage.com

Here follows the economic theory motivating our desire.

Open Source Lowers Cost, Increases Value

Closed source can not compete with open source, because the cost of programming is nearly the entire cost for a software company. For example, if the design of a manufactured item costs 1000 times the cost to manufacture 1 unit when a million units are produced, then the design cost is an insignificant 1/1000th of the total cost. The replication and distribution costs of software are approaching zero, thus the design (programming) cost is approaching the total cost. Thus, any company that is not sharing the programming cost with other companies, is resource constrained relative to those companies which are sharing their programming costs. Tangentially, this is why patents on software retard maximum social value obtained from programming investment.

For-profit software companies gain market leverage and market-fitness by diversifying their products and/or services from the shared code at the top level of integration.

With standardized open source license on code, corporations can spontaneously share the cost of programming investment, without the friction, delay, risk, uncertainty, and complexity of multiple, multifarious, bilateral, ad hoc sharing negotiations.

Increased code reuse (sharing) lowers the programming cost for corporations, enabling them to focus capital on their market-fitness integration expertise-- where they generate profits.

Project Granularity of Code Re-use Limits Sharing, Cost Savings, and Value

Open source has reduced the total programming cost to corporations and end users, but due to project-level granularity of code reuse (i.e. sharing), this reduction is not maximized, and costs have not decreased for smaller companies and individual programmers in those cases where they can not spontaneously build derivative code incorporating only parts of projects. Although there has been a boom in diversity and market-fitness of software products, it is not maximized, which is the manifestation of the lack of cost reduction in the aforementioned cases.

The scale of open source reuse is limited by general lack of fine-grained reuse of code inherent in the lack of referential transparency in existing software languages. Instead, we primarily have reuse of entire projects, e.g. multiple companies from the exchange economy sharing programming investment on Linux, Apache server, Firefox browser, etc..It is not easy to reuse sub-modules (i.e. parts) from these projects in other projects, due to the lack of forethought and referential transparency (i.e. too much "spaghetti code" littered with hidden inextricable, interdependencies) in existing software language and design. The granularity of code reuse dictates the scale of shared investment, because for example fewer companies would find an incentive to invest in an entire project than to invest in reuseable code modules within projects, where those modules could be used in a greater diversify of derivative software. Increase the granularity of code reuse by orders-of-magnitude via referential transparency in software languages, then the sharing of programming cost will also increase by orders-of-magnitude. With orders-of-magnitude lower programming cost, then smaller companies and individual programmers will be able to move to the top of the food chain of integration, and there will be orders-of-magnitude faster rate of progress and diversity of market-fitness in software.

Increased Granularity of Re-use Will Transition from Reputation to Exchange Economy

As granularity of reuse of programming code increases via new software languages that incorporate referential transparency paradigms, the economics of contribution will need to become more fungible. Currently the economics of open source is funded by large corporations which have clear strategic paybacks for the projects they choose to invest in. At the project level of granularity, strategic ramifications are reasonable to calculate. However, as granularity of reuse increases, the diversity of scenarios of reuse will increase exponentially or greater, so thus it will become impossible to calculate a payback for a specific contribution.

Although some open source proponents have postulated that the "gift culture"[1] drives contribution, as diversity of contribution increases into smaller parts (instead of project-level contribution), the value of reputation will plummet in areas that reward free contribution. First, it will become much less necessary to gain social status in order to get other people to agree to work with you, as contribution will become disconnected from project objectives and a leader's ability to make a project succeed. Instead, contributors will need another payback incentive. Second, since the economic inputs of large corporations will diminish in significance, the value of reputation in gaining income (a job) will diminish. Instead individual programmers and smaller companies will create their own jobs, by creating more derivative software, given the lower cost of reuse of smaller modules. So the incentive to reuse increases as cost of reuse is lowered via increased granularity of referential transparency, but what will motivate contribution if the value of reputation diminishes? The answer is the value of reciprocity. Those who want to reuse, will become willing to contribute, if others who want to reuse are willing to contribute. This is an exchange economy.

Although some argue that gifting contributions is driven by the incentive to have your contribution merged into the code of the derivative software you use[3], yet with a referentially transparent programming model and automated builds from a repository, customized builds may have no ongoing maintenance. Moreover, if a bug-fix or improvement can increase the value (as defined below) of the source contribution it is fixing even if the price of the source contribution is lowered by the price requested for the fix, then existing derivatives are not discouraged from adopting the fix. Thus, the owner of the source contribution could become the gate-keeper for merging fixes into derivative works, not the owner of the derivative work, which thus decentralizes the process as compared to the gift economic model currently employed for open source.

Studying history, there are no instances where the "gift culture" scaled to an optimal free market. Gift cultures exist only where inefficient social hierarchies are subsidized by abundance, e.g. open source currently funded via large corporate contributions driven by the abundant unmet end user demand for software. However, every year in China, 1 out of 6 million college graduates can't find a job, and many more are underpaid and underutilized, the world is currently at a debt-saturation crossroads, and it is not clear how there will be sufficient abundance of jobs to employ the billions in the developing world. Exchange economies are how the free market optimally anneals best-fit and diversity in times of lack of the subsidy of abundance. Reputation is measured in fungible units, called money or in our case, more specifically the value of current active contributions.

The challenge is how to make the exchange of reusable small module contribution value fungible and efficient. The value of a module of code is determined by its exchange price and the quantity of derivative code that employs it. The price should be increased or decreased to maximize this value. The price is paid periodically in bulk by those distributing for-profit derivative software, not micro-payments. Typically the price would apply to a per installation (not per user) license, where upgrades (fixes and improvements) might be free to existing installations because the code is continually re-used in new derivative works that pay the price. Thus the proposed exchange economic model has an advantage over the gift model, in that upgrade costs are amortized over derivative software proliferation, instead of the service contract model.[4]

Copyright Codifies the Economic Model

So if for-profit derivative use of a contribution has a price, then contributing stolen code could destroy value. This is copyright, and is analgous in the gift (reputation) economy to the use of copyright in existing open source standard licenses to force that derivative works give credit the source contribution. In each economic model, copyright codifies the restrictions that make that model. Closed source is a command economy, free open source is a gift (reputation) economy, and priced open source is an exchange economy. Note since gift (reputation) economy relies on social status and abundance subsidy, it does not economically maximize spontaneous individual efforts, i.e. similarities to socialism.

- Code:

Economic Model Copyright Restriction

---------------------------------------

Command no derivatives, no copies

Gift no delisting the source

Exchange no selling of stolen source

Human language writing is mostly a gift (reputation) economy, because the writer gains upsell benefits as his ideas and reputation grow, even via those who re-distribute the writings without paying royalties. Programming is different from writing in a crucial aspect-- the end users never read the code and 99.9% of the population are not programmers[2] (and even amongst programmers, most do not read the code of every software they use). In the current hacker gift (reputation) economy model, programmers can gain value by building reputation amongst a smaller circle of peers who know their work well, and given the funding for contribution is coming from large corporations that have strategic interests met by the free sharing model, then prevention of theft adds no value. However, if granularity of reuse incentivizes a move towards more of an exchange economy model, then theft is parasite on value, as there is less upsell benefits when reputation is morphed from small circles of social hierarchy to wider scale exchange value, and so copyright will morph to codify that theft is not allowed. Note that value in the exchange economy is based on quantity of derivative reuse, thus it creates more value to incentivize free reuse where those derivatives will be reused in paid derivatives. Thus the differential pricing in exchange economics, i.e. free use for non-profit purposes or upstart business volumes, and volume discounts for-profit use.

There is not likely to be complete shift from reputation to exchange economics, but rather a blending of the two.

[1] Eric Raymond, Homesteading the Noosphere, The Hacker Milieu as Gift Culture, http://www.catb.org/~esr/writings/homesteading/homesteading/ar01s06.html

[2] http://stackoverflow.com/questions/453880/how-many-developers-are-there-in-the-world

[3] Eric Raymond, The Magic Cauldron, The Inverse Commons, http://www.catb.org/~esr/writings/cathedral-bazaar/magic-cauldron/ar01s05.html

[4] Eric Raymond, The Magic Cauldron, The Manufacturing Delusion, http://www.catb.org/~esr/writings/cathedral-bazaar/magic-cauldron/ar01s03.html

Made significant improvements to the economic theory

Made significant improvements to the economic theory

The section "Increased Granularity of Re-use Will Transition from Reputation to Exchange Economy" had been significantly improved, as I read and refuted Eric Raymond's arguments against an exchange economic model in his The Magic Cauldron.

Note I am not disagreeing with Mr. Raymond's other points about open source. I disagree with him only on the narrow point of gift (reputation) vs. exchange, as the correct model for maximizing progress in open source. And I don't entirely disagree with him about the value of the gift (reputation) model, as I state at the end of my theory page, that we are likely to see a blending of the two models.

I think the theory is extremely important. If you were an angel investor or software engineer who wants to seriously consider the Copute opportunity, you would want to know the detailed theory of why it is being done, before you leap with your capital or career.

Note I am not disagreeing with Mr. Raymond's other points about open source. I disagree with him only on the narrow point of gift (reputation) vs. exchange, as the correct model for maximizing progress in open source. And I don't entirely disagree with him about the value of the gift (reputation) model, as I state at the end of my theory page, that we are likely to see a blending of the two models.

I think the theory is extremely important. If you were an angel investor or software engineer who wants to seriously consider the Copute opportunity, you would want to know the detailed theory of why it is being done, before you leap with your capital or career.

SPOT

SPOT

Functional programming by itself is not enough to maximize modularity and composability.

So multi-paradigm languages attempt to mix the best of OOP with the best of FP:

http://c2.com/cgi/wiki?ObjectFunctional

Critical to this is SPOT (single-point-of-truth), which is the ability to localize specification in order to maximize modularity and composability (e.g. enables inversion-of-control or Hollywood principle).

Critical to this is Scala's unique algorithm for multiple inheritance. I improved this in a crucial way, by unconflating the Scala trait into an interface and mixin, and adding static methods. I found this to be necessary to maximize modularity and composability, when for example designing the monadic OOP code that I had sent you the link for in a prior email.

If you look at other multi-paradigm attempts, e.g. D Language, etc., you find they don't have this unique multiple inheritance, nor the improvements Copute makes to Scala.

What I also did with Copute was generalize purity (referential transparency) to the class model and closures.

So this is why Copute is a major advance over every language I have studied. If I could find Copute's features in an existing language, I would be very happy.

Some links:

http://en.wikipedia.org/wiki/Single_Point_of_Truth

http://en.wikipedia.org/wiki/Single_Source_of_Truth

http://en.wikipedia.org/wiki/Hollywood_principle

Constructors are considered harmful (<--- must read as it has the major points of this post)

So multi-paradigm languages attempt to mix the best of OOP with the best of FP:

http://c2.com/cgi/wiki?ObjectFunctional

Critical to this is SPOT (single-point-of-truth), which is the ability to localize specification in order to maximize modularity and composability (e.g. enables inversion-of-control or Hollywood principle).

Critical to this is Scala's unique algorithm for multiple inheritance. I improved this in a crucial way, by unconflating the Scala trait into an interface and mixin, and adding static methods. I found this to be necessary to maximize modularity and composability, when for example designing the monadic OOP code that I had sent you the link for in a prior email.

If you look at other multi-paradigm attempts, e.g. D Language, etc., you find they don't have this unique multiple inheritance, nor the improvements Copute makes to Scala.

What I also did with Copute was generalize purity (referential transparency) to the class model and closures.

So this is why Copute is a major advance over every language I have studied. If I could find Copute's features in an existing language, I would be very happy.

Some links:

http://en.wikipedia.org/wiki/Single_Point_of_Truth

http://en.wikipedia.org/wiki/Single_Source_of_Truth

http://en.wikipedia.org/wiki/Hollywood_principle

Constructors are considered harmful (<--- must read as it has the major points of this post)

Last edited by Shelby on Sat Nov 26, 2011 7:33 am; edited 3 times in total

Expression Problem

Expression Problem

Google it to know what it is.

Only statically typed languages can solve it. It can be solved with functional extension or OOP extension.

I discuss this and especially in Comment #2, I explain why SPOT is superior to ad-hoc polymorphism (duck typing):

http://code.google.com/p/copute/issues/detail?id=39

Only statically typed languages can solve it. It can be solved with functional extension or OOP extension.

I discuss this and especially in Comment #2, I explain why SPOT is superior to ad-hoc polymorphism (duck typing):

http://code.google.com/p/copute/issues/detail?id=39

Programming languages chart

Programming languages chart

http://www.pogofish.com/types.png

http://james-iry.blogspot.com/2010/05/types-la-chart.html

Copute will be on the "pure" row to right of Clean, in the "higher-kinded" column.

Note that chart does not illustrate whether nominal or structural typing is used and the aggressiveness of type inference.

http://james-iry.blogspot.com/2010/05/types-la-chart.html

Copute will be on the "pure" row to right of Clean, in the "higher-kinded" column.

Note that chart does not illustrate whether nominal or structural typing is used and the aggressiveness of type inference.

JavaCC LL(k) lexer and parser generator

JavaCC LL(k) lexer and parser generator

1. Copute's grammar requires the tracking of indenting around newlines, e.g. NLI means newline-indent:

http://copute.com/dev/docs/Copute/ref/grammar.txt

This is done so that Copute can fix some common human errors that occur around newlines, when semicolons ( ; ) are not required to terminate a line of code (as is the case in Copute and JavaScript).

JavaCC automatically generates a lexer, but I can see no way to tell it to track indenting to qualify the newline tokens sent to the parser:

http://www.cs.lmu.edu/~ray/notes/javacc/

http://www.engr.mun.ca/~theo/JavaCC-Tutorial/javacc-tutorial.pdf

http://javacc.java.net/doc/javaccgrm.html

2. JavaCC's .jj BNF specification syntax is too verbose (compared to Copute's grammar format). It might be possible to automatically convert Copute's more terse BNF format to a .jj file, so this issue could possibly be alleviated or mitigated.

3. JavaCC generates Java code and although I could probably interface Java and Scala code (since I want to write the rest of the compiler in Scala), this is fugly. I would like to use Scala (eventually bootstrapped to Copute) code, to make the code elegant and easy-to-read.

4. Looks like JavaCC generates non-optimal parse tables and parsing code.

5. JavaCC appears to be a monolithic (tangled spaghetti) design (apparently I can't even untangle the lexer and parser). I would prefer to build a parser generator that includes multiple composable modules, e.g. the generation of First(k) and Follow(k) sets should be orthogonal to a module that generates a parse table from those sets, and orthogonal yet again to a module that generates Scala code for the parser that integrates with the parse table, etc.. The point is I would like to have source code I can wrap my mind around.

6. I would like a tight coupling of the naming in the BNF file and the class names of the AST.

7. The documentation for JavaCC and JJTree seems overly complex. Surely we can simplify this.

8. The time we spend trying to shoehorn JavaCC, might be enough to code our own superior parser generator. I think the lexer for Copute needs to be hand-coded, not from a DFA generator.

> I tend to agree with you sometimes in the long run it is much much better

> to

> code it yourself than rely completely on a library that doesn't do exactly

> what you need, or is just too chaotic for you to ever fully understand

> then

> waste time trying to modify it. Those types of problems tend to get worse

> as the project progresses as well. I also place a very high value on

> fully

> understanding the code I use if I need to modify it, and the best way to

> do

> that is to write it yourself. I find personally I can very quickly

> decide

> which 3rd party libraries I like and which I don't after giving them some

> review, and it seems you've already decided you don't like JavaCC

Additional points:

A) One milestone test of a new language, is to write the compiler for that language in that language (a/k/a bootstrapping).

B) Writing the parser in more modular design, means not only we can understand it more readily, but so can others who might want to help contribute. Although JavaCC has a lot of contributors and users, they may not share our needs and focus, i.e. Java is far in principles, culture, features and focus from Copute and Scala:

http://copute.com/dev/docs/Copute/ref/intro.html#Java

C) Top of my head guess, I doubt the parser generator will take more time to code, than to figure out how to get the lexer we need work with JavaCC's integrated and apparently inseparable lexer.

=================================

http://members.cox.net/slkpg/

http://members.cox.net/slkpg/documentation.html#SLK_Quotations

http://copute.com/dev/docs/Copute/ref/grammar.txt

This is done so that Copute can fix some common human errors that occur around newlines, when semicolons ( ; ) are not required to terminate a line of code (as is the case in Copute and JavaScript).

JavaCC automatically generates a lexer, but I can see no way to tell it to track indenting to qualify the newline tokens sent to the parser:

http://www.cs.lmu.edu/~ray/notes/javacc/

http://www.engr.mun.ca/~theo/JavaCC-Tutorial/javacc-tutorial.pdf

http://javacc.java.net/doc/javaccgrm.html

2. JavaCC's .jj BNF specification syntax is too verbose (compared to Copute's grammar format). It might be possible to automatically convert Copute's more terse BNF format to a .jj file, so this issue could possibly be alleviated or mitigated.

3. JavaCC generates Java code and although I could probably interface Java and Scala code (since I want to write the rest of the compiler in Scala), this is fugly. I would like to use Scala (eventually bootstrapped to Copute) code, to make the code elegant and easy-to-read.

4. Looks like JavaCC generates non-optimal parse tables and parsing code.

5. JavaCC appears to be a monolithic (tangled spaghetti) design (apparently I can't even untangle the lexer and parser). I would prefer to build a parser generator that includes multiple composable modules, e.g. the generation of First(k) and Follow(k) sets should be orthogonal to a module that generates a parse table from those sets, and orthogonal yet again to a module that generates Scala code for the parser that integrates with the parse table, etc.. The point is I would like to have source code I can wrap my mind around.

6. I would like a tight coupling of the naming in the BNF file and the class names of the AST.

7. The documentation for JavaCC and JJTree seems overly complex. Surely we can simplify this.

8. The time we spend trying to shoehorn JavaCC, might be enough to code our own superior parser generator. I think the lexer for Copute needs to be hand-coded, not from a DFA generator.

> I tend to agree with you sometimes in the long run it is much much better

> to

> code it yourself than rely completely on a library that doesn't do exactly

> what you need, or is just too chaotic for you to ever fully understand

> then

> waste time trying to modify it. Those types of problems tend to get worse

> as the project progresses as well. I also place a very high value on

> fully

> understanding the code I use if I need to modify it, and the best way to

> do

> that is to write it yourself. I find personally I can very quickly

> decide

> which 3rd party libraries I like and which I don't after giving them some

> review, and it seems you've already decided you don't like JavaCC

Additional points:

A) One milestone test of a new language, is to write the compiler for that language in that language (a/k/a bootstrapping).

B) Writing the parser in more modular design, means not only we can understand it more readily, but so can others who might want to help contribute. Although JavaCC has a lot of contributors and users, they may not share our needs and focus, i.e. Java is far in principles, culture, features and focus from Copute and Scala:

http://copute.com/dev/docs/Copute/ref/intro.html#Java

C) Top of my head guess, I doubt the parser generator will take more time to code, than to figure out how to get the lexer we need work with JavaCC's integrated and apparently inseparable lexer.

=================================

http://members.cox.net/slkpg/

SLK currently supports the C, C++, Java and C# languages on all platforms. Note that the SLK parsers primarily consist of tables of integers as arrays and a very small parse routine that only uses the simple, and universally supported features of the language. This is in contrast to parser generators that produce recursive descent code. They must force a certain programming model on the user because the parsing code is intermixed with the user action code. SLK places no limits or requirements on the coding style of the user.

http://members.cox.net/slkpg/documentation.html#SLK_Quotations

Performance comment from Fischer & LeBlanc (1991), page 120:

Since the LL(1) driver uses a stack rather than recursive procedure calls to store symbols yet to be matched, the resulting parser can be expected to be smaller and faster than a corresponding recursive descent parser.

Last edited by Shelby on Wed Jun 15, 2011 5:24 am; edited 2 times in total

Downside of multiplicitous syntax

Downside of multiplicitous syntax

Having one standard way to write common things in a language, makes the code more readily readable (i.e. comprehensible) by a wider audience.

A programming language which requires mentally parsing multifarious syntactical formations increases the difficulty of becoming proficient at reading such a language.

For example Scala has numerous ways to write a method that inputs a String and returns its length as an Int:

And two ways to write methods that accept more than one argument (types are inferred in this example):

===============

Someone else recently mentioned this:

http://stackoverflow.com/questions/8303817/scala-9-ways-to-define-a-method

A programming language which requires mentally parsing multifarious syntactical formations increases the difficulty of becoming proficient at reading such a language.

For example Scala has numerous ways to write a method that inputs a String and returns its length as an Int:

- Code:

def len( s : String ) : Int = s.length

def len( s : String ) : Int { s.length }

def len( s : String ) : Int { return s.length }

val len : String => Int = s => s.length

val len = (s: String) => s.length

val len : String => Int = _.length

object len {

def apply( s : String ) : Int = s.length

}

And two ways to write methods that accept more than one argument (types are inferred in this example):

- Code:

def f( arg1, arg2 ) = arg1 + arg2

def f( arg1 )( arg2 ) = arg1 + arg2

===============

Someone else recently mentioned this:

http://stackoverflow.com/questions/8303817/scala-9-ways-to-define-a-method

Last edited by Shelby on Thu Dec 01, 2011 7:52 pm; edited 2 times in total

FINISHED DRAFT: explanation of the technical rational for Copute

FINISHED DRAFT: explanation of the technical rational for Copute

Perhaps the most significant drag on programming productivity and correctness is the inability to fully comprehend all the dependencies and ramifications when interopting with some existing programming code. The severity probably increases exponentially with the size of the existing code.

The difficulty in comprehending existing code, is superficially manifested as lack of familiarity, multiple obscure syntax to accomplish same task, multiple coding styles, and multiple programming paradigms. These issues are amplified fundamentally when there is not a localization (a/k/a "separation") -of-concerns, i.e. that modules of code cannot be entirely understood in isolation. For example, if a module has ramifications on the overall state-machine that reach into other modules, then it is difficult to fully comprehend the single module, without understanding the other modules. The tentacles of cross-dependencies (a/k/a "spaghetti") proliferate into exponential states, thus exponentially increasing the time and complexity required to overcome the unfamiliarity of each module. Beyond some threshold of complexity of tangled dependencies, it becomes impractical to reason about how modules will interact (even for someone familiar with the existing code), thus composition of existing code is gambling (indeterminate).

The ability to extend existing code deterministically while minimizing comprehension overhead is the fundamental reason that programmers cannot interopt with maximum efficiency and thus software engineering follows a negative scaling law, known as The Mythical Man-Month:

http://en.wikipedia.org/wiki/The_Mythical_Man-Month#Ideas_presented

http://johntron.com/communication/the-cathedral-bazaar-mythical-man-and-bees/

The only known positive scaling law of software engineering is the brute-force style of open source where an excess pool of labor is thrown at the problem by removing the centralized control of reading code, but with centralized control over writing code to the official trunk:

http://www.catb.org/~esr/writings/cathedral-bazaar/cathedral-bazaar/ar01s05.html

http://www.catb.org/~esr/writings/cathedral-bazaar/linux1_d50_48kbs.mp3 (listen at 4:20)

The brute-force method does not address the fundamental problem of modularity of INDEPENDENT code extension (e.g. localization or separation-of-concerns), but rather provides for wider-scale reading participation while not removing the bottleneck of centralized control over extension and comprehension of large trunks of code.

Solving the fundamental problem of modularity of orthogonal code extension is likely to result in an exponential increase in software innovation, because the problem degrades and penalizes extension at an exponential rate, due to the exponential proliferation of tangled conflated states between modules.

This old problem was eventually named "The Expression Problem" by Philip Wadler in 1998, which basically says that "The goal is to define a datatype by cases, where one can add new cases to the datatype and new functions over the datatype, without recompiling existing code, and while retaining static type safety (e.g., no casts)".

One failed attempt to deal with this problem is the virtual method for an OOP class, which breaks the rules of the Liskov Substitution Principle. For example, if there is a class CSet which has an Add method, which by default adds a new member to the set, and if this is Add method is virtually overridden by a new subtype CBag, which only adds unique members, then a function which inputs a CSet does not expect Add to fail when the member is not unique. Another example is a CRectangle, which has a virtual method to set the length of a single side, but the subclass CSquare will set the length of both sides.

The problem with virtual methods is that there is a default implementation which is not substitutable for the overridden implementation. The reference to the default implementation expects it to be substitutable. Thus the solution is to use instead a referenceable interface, which specificies method signature(s), but provides no default implementation. When this interface is referenced (i.e. a function inputs an instance of the interface and calls a method of the interface), the referrer is not expecting a default implementation and thus there is no substitutability violation. Ideally the implementation (the class or mixin) is never referenced directly, rather only via an interface.

Thus the key to composability (i.e. localization/separation-of-concerns) is the granularity of composing interfaces, reusable implementations of interfaces (i.e. mixins), and the declaration that a method is referentially transparent (i.e. does not involve state). To achieve this granularity and localization it is necessary to have Scala's linearized multiple inheritance, a separation of Scala's trait into interface and mixin (where mixin can never be referenced, only used in inheritance), and adding static methods to interfaces to abstract instance construction, as discussed by Bracha:

https://goldwetrust.forumotion.com/t112p135-computers#4191

As far as I can see, the ability to mixin existing interfaces, is sufficient to solve the Expression Problem and it is not necessary to use other esoteric typing features offered by Scala:

http://www.scala-lang.org/docu/files/IC_TECH_REPORT_200433.pdf

There is no known computer language that adequately addresses the Expression Problem, because they lack some of the aforementioned requirements. For example, Haskell has referential transparency (but not optional, except via the obtuse State monad), and has type classes which are like interfaces:

http://en.wikibooks.org/wiki/Haskell/Class_declarations

But as far as I can see, Haskell is lacking linearized multiple inheritance and static methods in type classes. Apparently there is no subtyping of implementation in Haskell (there is inheritance of "class" which is like an interface), so inheritance of implementation is only ungrouped via the maximally granular per-function inclusion in the "where" of the "instance" declaration. Maximally granular is ideal as the upper limit of implementation mixin granularity, but not also as the lower limit. In Haskell there is no way to inherit groups of methods (a/k/a mixins), nor hierarchal structure. Also, Haskell type inference allows structural subtyping (a/k/a duck typing), where any function that does not declare the input class will match any class that has the invoked methods. Duck typing is thought to violate Expression Problem, because the pre-existing code may be operating on a type which is not a subtype of the intended type:

http://c2.com/cgi/wiki?NominativeAndStructuralTyping

O'Haskell claims to add subtyping, but it has not been studied to see if it provides linearized mixin inheritance.

The difficulty in comprehending existing code, is superficially manifested as lack of familiarity, multiple obscure syntax to accomplish same task, multiple coding styles, and multiple programming paradigms. These issues are amplified fundamentally when there is not a localization (a/k/a "separation") -of-concerns, i.e. that modules of code cannot be entirely understood in isolation. For example, if a module has ramifications on the overall state-machine that reach into other modules, then it is difficult to fully comprehend the single module, without understanding the other modules. The tentacles of cross-dependencies (a/k/a "spaghetti") proliferate into exponential states, thus exponentially increasing the time and complexity required to overcome the unfamiliarity of each module. Beyond some threshold of complexity of tangled dependencies, it becomes impractical to reason about how modules will interact (even for someone familiar with the existing code), thus composition of existing code is gambling (indeterminate).

The ability to extend existing code deterministically while minimizing comprehension overhead is the fundamental reason that programmers cannot interopt with maximum efficiency and thus software engineering follows a negative scaling law, known as The Mythical Man-Month:

http://en.wikipedia.org/wiki/The_Mythical_Man-Month#Ideas_presented

http://johntron.com/communication/the-cathedral-bazaar-mythical-man-and-bees/

The only known positive scaling law of software engineering is the brute-force style of open source where an excess pool of labor is thrown at the problem by removing the centralized control of reading code, but with centralized control over writing code to the official trunk:

http://www.catb.org/~esr/writings/cathedral-bazaar/cathedral-bazaar/ar01s05.html

http://www.catb.org/~esr/writings/cathedral-bazaar/linux1_d50_48kbs.mp3 (listen at 4:20)

The brute-force method does not address the fundamental problem of modularity of INDEPENDENT code extension (e.g. localization or separation-of-concerns), but rather provides for wider-scale reading participation while not removing the bottleneck of centralized control over extension and comprehension of large trunks of code.

Solving the fundamental problem of modularity of orthogonal code extension is likely to result in an exponential increase in software innovation, because the problem degrades and penalizes extension at an exponential rate, due to the exponential proliferation of tangled conflated states between modules.

This old problem was eventually named "The Expression Problem" by Philip Wadler in 1998, which basically says that "The goal is to define a datatype by cases, where one can add new cases to the datatype and new functions over the datatype, without recompiling existing code, and while retaining static type safety (e.g., no casts)".

One failed attempt to deal with this problem is the virtual method for an OOP class, which breaks the rules of the Liskov Substitution Principle. For example, if there is a class CSet which has an Add method, which by default adds a new member to the set, and if this is Add method is virtually overridden by a new subtype CBag, which only adds unique members, then a function which inputs a CSet does not expect Add to fail when the member is not unique. Another example is a CRectangle, which has a virtual method to set the length of a single side, but the subclass CSquare will set the length of both sides.

The problem with virtual methods is that there is a default implementation which is not substitutable for the overridden implementation. The reference to the default implementation expects it to be substitutable. Thus the solution is to use instead a referenceable interface, which specificies method signature(s), but provides no default implementation. When this interface is referenced (i.e. a function inputs an instance of the interface and calls a method of the interface), the referrer is not expecting a default implementation and thus there is no substitutability violation. Ideally the implementation (the class or mixin) is never referenced directly, rather only via an interface.

Thus the key to composability (i.e. localization/separation-of-concerns) is the granularity of composing interfaces, reusable implementations of interfaces (i.e. mixins), and the declaration that a method is referentially transparent (i.e. does not involve state). To achieve this granularity and localization it is necessary to have Scala's linearized multiple inheritance, a separation of Scala's trait into interface and mixin (where mixin can never be referenced, only used in inheritance), and adding static methods to interfaces to abstract instance construction, as discussed by Bracha:

https://goldwetrust.forumotion.com/t112p135-computers#4191

As far as I can see, the ability to mixin existing interfaces, is sufficient to solve the Expression Problem and it is not necessary to use other esoteric typing features offered by Scala:

http://www.scala-lang.org/docu/files/IC_TECH_REPORT_200433.pdf

There is no known computer language that adequately addresses the Expression Problem, because they lack some of the aforementioned requirements. For example, Haskell has referential transparency (but not optional, except via the obtuse State monad), and has type classes which are like interfaces:

http://en.wikibooks.org/wiki/Haskell/Class_declarations

But as far as I can see, Haskell is lacking linearized multiple inheritance and static methods in type classes. Apparently there is no subtyping of implementation in Haskell (there is inheritance of "class" which is like an interface), so inheritance of implementation is only ungrouped via the maximally granular per-function inclusion in the "where" of the "instance" declaration. Maximally granular is ideal as the upper limit of implementation mixin granularity, but not also as the lower limit. In Haskell there is no way to inherit groups of methods (a/k/a mixins), nor hierarchal structure. Also, Haskell type inference allows structural subtyping (a/k/a duck typing), where any function that does not declare the input class will match any class that has the invoked methods. Duck typing is thought to violate Expression Problem, because the pre-existing code may be operating on a type which is not a subtype of the intended type:

http://c2.com/cgi/wiki?NominativeAndStructuralTyping

O'Haskell claims to add subtyping, but it has not been studied to see if it provides linearized mixin inheritance.

Higher Kinded type abstraction (a/k/a generics or polymorphism)

Higher Kinded type abstraction (a/k/a generics or polymorphism)

Higher Kinded types are actually quite easy to understand.

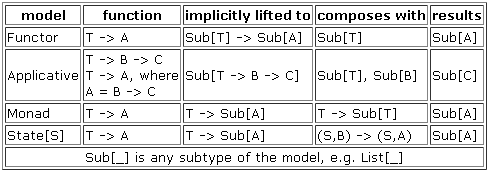

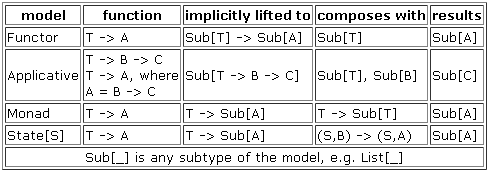

See the illustrations for section 5, on pages 6 and 7:

http://wiki.ifs.hsr.ch/SemProgAnTr/files/HigherKindGenericsInScala.pdf

Concrete (a/k/a "proper") types (e.g. Int, List[Int]) can be instantiated (a/k/a "value"). Abstract types (e.g. List[T], Pair[U, V]) are type constructors, i.e. they can construct a concrete type when their type parameters (e.g. T, U, V) are specified.

A higher kinded type system allows the type parameter for an abstract type to be an abstract type, instead of requiring it be a concrete type. Without higher-kinded typing we can do:

Or:

Neither of the above enforce (at compile time, i.e. static typing) the return type of filter for subtypes of Iterable.

Whereas with higher-kinded types we can keep the SPOT (single-point-of-truth) in the base Iterable class:

Note higher-kinded typing can be performed with C++ templates, but this is fugly verbose and remember that templates are reified at compile-time, which afair has some drawbacks one of which is in terms of the Expression Problem.

http://stackoverflow.com/questions/2565097/higher-kinded-types-with-c

The creators of Scala published a paper on their higher-kinded typing:

http://adriaanm.github.com/files/higher.pdf

See the illustrations for section 5, on pages 6 and 7:

http://wiki.ifs.hsr.ch/SemProgAnTr/files/HigherKindGenericsInScala.pdf

- Code:

Value: 5 [1,2,3] abstract abstract abstract

Type: Int List[Int] List[T] Pair[U, V] Iterable[T, Sub[_]]

Kind: * * * -> * * -> * -> * * -> (* -> *) -> *

Concrete (a/k/a "proper") types (e.g. Int, List[Int]) can be instantiated (a/k/a "value"). Abstract types (e.g. List[T], Pair[U, V]) are type constructors, i.e. they can construct a concrete type when their type parameters (e.g. T, U, V) are specified.

A higher kinded type system allows the type parameter for an abstract type to be an abstract type, instead of requiring it be a concrete type. Without higher-kinded typing we can do:

- Code:

class Iterable[T, Sub]

{

filter : T -> Bool -> Sub

}

class ListInt inherits Iterable[Int, ListInt] {}

Or:

- Code:

class Iterable[T]

{

filter : T -> Bool -> Iterable[T]

}

class List[T] inherits Iterable[T]

{

filter : T -> Bool -> List[T] // an override

}

Neither of the above enforce (at compile time, i.e. static typing) the return type of filter for subtypes of Iterable.

Whereas with higher-kinded types we can keep the SPOT (single-point-of-truth) in the base Iterable class:

- Code:

class Iterable[T, Sub[_]]

{

filter : T -> Bool -> Sub[T]

}

class List[T] inherits Iterable[T, List] {}

Note higher-kinded typing can be performed with C++ templates, but this is fugly verbose and remember that templates are reified at compile-time, which afair has some drawbacks one of which is in terms of the Expression Problem.

http://stackoverflow.com/questions/2565097/higher-kinded-types-with-c

The creators of Scala published a paper on their higher-kinded typing:

http://adriaanm.github.com/files/higher.pdf

Last edited by Shelby on Thu Sep 08, 2011 12:05 am; edited 2 times in total

simplifying the Copute grammar

simplifying the Copute grammar

In the attached file, I am overhauling the LL(k) SLK parser grammar that originally appeared here:

http://copute.com/dev/docs/Copute/ref/grammar.txt

http://members.cox.net/slkpg/

Note the attached incomplete grammar is already LL(5), i.e. 5 tokens of lookahead, while the prior grammar was LL(3).

I have significantly increased the documentation in the grammar.

One of the first goals has been to eliminate the sort of multiplicitous syntax readability problem with Scala:

https://goldwetrust.forumotion.com/t112p165-computers#4420

So now in Copute, there is only one way to declare a function which is an expression that can be used where ever a function of the correct type is expected:

And only way way to declare a reference to a function (i.e. for a method), and the type of the function is always declared by what the function is assigned to (imagine also passing the function as an argument to another function), e.g.:

When declared within the body of an interface, mixin, or class, the above is a method (i.e. a function that inputs 'this' in an undeclared first argument). To declare as function that does not input 'this', then the read+write properties should be declared (note constructor arguments are always (public,const), but can be obscured by assigning to a property with same identifier), e.g.:

A method can be declared private:

So thus the interface to a method is just the first part:

And the inherited implementation in class or mixin does not need to restate the function type:

Note the optional default arguments and lazy evaluation declaration is specified where the function is, but the function interface declaration can be separated from the function body, thus in the interface:

And for the implementation in the mixin or class is not required to repeat it:

My other goals are to make type inference more local and consistent enforcement of where type should/must be specified or not. For example, type can not be specified in the function itself, which I think makes reading a function too dense any way:

\s : Int, pos : Int -> s.substring( pos ).length \\ syntax error

For the default case. it is no longer necessary to prepend with 'var' or 'cref', as it is in Scala (e.g. 'def'), so a lower verbosity syntax.

I am also trying to convert some statements (e.g. switch) into expressions to make the syntax more bijective (compatible in both directions) with Scala, since everything is an expression in Scala and there are no statements.

> That's good. I've long been a fan of "one and only one way to do things".

> In fact, I've suggested Scala delete methods and just require methods be

> defined as "val mult = (x: Int, y: Int) => x * y".

Your proposal without the return type inferred would be:

Copute method is declared:

I prefer the type of the instance reference 'mult' to be unconflated from the rvalue declaration. It is crowded to read the arguments (x and y) with the type noise, especially when you add call-by-name (lazy evaluation) and default values annotations to arguments. Also thus non-function types are unified with function types in terms of where the type declaration appears in an instance reference declaration. I prefer -> instead of Scala's => for the function constructor, because the latter looks too much like say +=, and it often appears close to an assignment =, thus making it look like = ... = ..., and in general I don't like overloading the meaning of '=' to mean something other than assignment. Also -> is whitespace friendly and is consistent with Haskell and other languages and I think also some lamba calculus notations.

Copute non-method member which is a function type is differentiated from a method by the explicit declaration of its getter and setter permissions:

http://copute.com/dev/docs/Copute/ref/grammar.txt

http://members.cox.net/slkpg/

Note the attached incomplete grammar is already LL(5), i.e. 5 tokens of lookahead, while the prior grammar was LL(3).

I have significantly increased the documentation in the grammar.

One of the first goals has been to eliminate the sort of multiplicitous syntax readability problem with Scala:

https://goldwetrust.forumotion.com/t112p165-computers#4420

So now in Copute, there is only one way to declare a function which is an expression that can be used where ever a function of the correct type is expected:

- Code:

\s -> s.length

And only way way to declare a reference to a function (i.e. for a method), and the type of the function is always declared by what the function is assigned to (imagine also passing the function as an argument to another function), e.g.:

- Code:

len : Int -> Int = \s -> s.length

When declared within the body of an interface, mixin, or class, the above is a method (i.e. a function that inputs 'this' in an undeclared first argument). To declare as function that does not input 'this', then the read+write properties should be declared (note constructor arguments are always (public,const), but can be obscured by assigning to a property with same identifier), e.g.:

- Code:

len (public,public) : Int -> Int = \s -> s.length

A method can be declared private:

- Code:

private len : Int -> Int = \s -> s.length // as in Scala, private may be qualified with a package name, e.g. private[name]

So thus the interface to a method is just the first part:

- Code:

len : Int -> Int

And the inherited implementation in class or mixin does not need to restate the function type:

- Code:

len = \s -> s.length

Note the optional default arguments and lazy evaluation declaration is specified where the function is, but the function interface declaration can be separated from the function body, thus in the interface:

- Code:

len : Int -> Int -> Int = \s, &pos = 0

And for the implementation in the mixin or class is not required to repeat it:

- Code:

len = \s, pos -> s.substring( pos ).length

My other goals are to make type inference more local and consistent enforcement of where type should/must be specified or not. For example, type can not be specified in the function itself, which I think makes reading a function too dense any way:

\s : Int, pos : Int -> s.substring( pos ).length \\ syntax error

For the default case. it is no longer necessary to prepend with 'var' or 'cref', as it is in Scala (e.g. 'def'), so a lower verbosity syntax.

I am also trying to convert some statements (e.g. switch) into expressions to make the syntax more bijective (compatible in both directions) with Scala, since everything is an expression in Scala and there are no statements.

> That's good. I've long been a fan of "one and only one way to do things".

> In fact, I've suggested Scala delete methods and just require methods be

> defined as "val mult = (x: Int, y: Int) => x * y".

Your proposal without the return type inferred would be:

- Code:

val mult = (x: Int, y: Int): Int => x * y

Copute method is declared:

- Code:

mult : Int -> Int -> Int = \x, y -> x * y

I prefer the type of the instance reference 'mult' to be unconflated from the rvalue declaration. It is crowded to read the arguments (x and y) with the type noise, especially when you add call-by-name (lazy evaluation) and default values annotations to arguments. Also thus non-function types are unified with function types in terms of where the type declaration appears in an instance reference declaration. I prefer -> instead of Scala's => for the function constructor, because the latter looks too much like say +=, and it often appears close to an assignment =, thus making it look like = ... = ..., and in general I don't like overloading the meaning of '=' to mean something other than assignment. Also -> is whitespace friendly and is consistent with Haskell and other languages and I think also some lamba calculus notations.

Copute non-method member which is a function type is differentiated from a method by the explicit declaration of its getter and setter permissions:

- Code:

mult (public, public) : Int -> Int -> Int = \x, y -> x * y

- Code:

sources:

{ source }+

source:

class

reference

expression

ifElse // TODO: move this to 'primary' and change 'then' to use 'exprSansIfelse' instead of 'expression', and add 'else' to that line

scope:

'{' [ NL ] sources [ NL ] '}'

reference:

[ refType ] ID typeInit // without refType, defaults to 'cref' for pass-by-reference, and 'var' for pass-by-value, instances

refType ID init // init without type is only allowed if prepended with a refType, because otherwise ambiguous with with an assignment expression, e.g. no way to obscure a reference of same name in outer scope or assignment expression reference name typo silently accepted as construction of new reference

refType:

'const' // the instance can not be modified, regardless if it is pass-by-reference or pass-by-value

'ref' // the reference can be modified to point to a different instance, applies only to pass-by-reference instances

'const' 'ref' // combines the above meanings, note the reference is not constant.

'var' // 'const' 'var' is not allowed because has same meaning as 'const'

'cref' // 'const' 'cref' is not allowed because has same meaning as 'const'

typeInit:

: nfType [ [ NL ] typeInit2 ] // init is optional if cType has a default constructor

tArgList [ NL ] ':' fType [ NL ] '=' [ NL ] function

//init when refType is not specified, this is ambiguous with an assignment expression. Disambiguate from assignment when ID is not already declared. Note this means a typo on ID will silently fail assignment and create a reference that is never accessed (so maybe we can detect on compilation and error). To hide an ID of same name in outer scope, then refType must be specified. If we did not allow assignment to declare new references, then mixin inheritance of an interface would require verbose 'cref' prepended to method implementations (due to the type being already specified in the interface).

init:

'=' [ NL ] rightExpr

typeInit2:

init

nextType [ NL ] '=' [ NL ] function

nfType:

cType // include standard classes for the builtin types, Int, Float, String, Bool

fType:

nfType [ NL ] nextType

fType2

fType2:

'(' fType ')' [ NL ] nextType

'void' [ NL ] '->' [ NL ] xtype // 'void' not an instance reference type, thus can not be a class, only allowed as a single argument or return type

xtype:

nfType

'(' fType ')'

type:

nfType [ [ NL ] nextType ]

fType2

nextType:

'->' [ NL ] xtype [ [ NL ] nextType ]

'->' [ NL ] 'void' // 'void' not an instance reference type, thus can not be a class, only allowed as a single argument or return type

function:

fDecl [ NL ] fBody

fDecl:

[ purity ] '\' [ fArgs ]

fArgs:

fArg [ [ NL ] ',' [ [ NL ] fArgs ] ]

fArg:

[ '&' ] ID [ init ] // the optional '&' is for pass-by-expression, i.e. lazy evaluation, i.e. argument expression is implicitly wrapped in a function call that returns the evaluated expression. Note not allowed for return type.

purity:

'impure'

'mutable'

fBody:

'->' [ NL ] rightExpr

scope

expression:

assign

rightExpr

assign:

leftExpr [ NL ] assignop [ NLI ] rightExpr

leftExpr:

ID { [ NL ] memberRef } // only a named reference, or its class properties, may be assigned to. This simplifies our grammar significantly. Since we are targetting pure programming, we discourage the assignment to a reference which is an unsaved return value-- because in pure programming we can only return a new instance and it thus has not been saved any where, thus would only be useful for impure, side-effect programming. Such impure programming can still be done (save a return value to a reference before assigning to it). Note, a method which inputs a 'void' can be called without '()', so semantic analysis should not allow assign to return value of such, nor its class properties.

//conflicts with call below: '(' assign ')' { [ NL ] memberRef }+ // an assign has saved a reference in an ID, so we can assign to its class properties.

memberRef:

'.' [ NLI ] ID // no [ NL ] after the operator, http://code.google.com/p/copute/issues/detail?id=31

rightExpr:

boolOr [ [ NL ] '?' [ NLI ] assign [ NL ] ':' [ NLI ] assign ] // aka 'ternary' operator

boolOr:

boolAnd { [ NL ] '||' [ NLI ] boolAnd }

boolAnd:

bitOr { [ NL ] '&&' [ NLI ] bitOr }

bitOr:

bitXor { [ NL ] '|' [ NLI ] bitXor }

bitXor:

bitAnd { [ NL ] '^' [ NLI ] bitAnd }

bitAnd:

equality { [ NL ] '&' [ NLI ] equality }

equality:

compare { [ NL ] equalityop [ NLI ] compare }

compare:

extrapolate { [ NL ] compareop [ NLI ] shift }

shift:

concat { [ NL ] shiftop [ NLI ] concat }

concat:

add { [ NL ] '#' [ NLI ] add }

add:

multiply { addop [ NLI ] multiply } // see NLPLUS and NLMINUS, we can't put a NL in front of addop, because NL also separates expr, and unary contains an addop on its left-side in prefixop

multiply:

unary { [ NL ] multiplyop [ NLI ] unary }

unary:

[ prefixop ] [ postfixop ] instance [ postfixop ]

instance:

primary { [ NL ] memberRef } [ callExpr ] // Semantic analysis must determine if 'memberRef' and/or 'callExpr' is allowed on 'primary'. Also, a function call might not return an instance (i.e. 'void') for an impure function that has side-effects.

'new' [ NL ] cDecl call

primary:

ID

'(' [ NL ] expression [ NL ] ')'

'(' [ NL ] function [ NL ] ')'

switch

callExpr:

call { callSuffix }

callSuffix:

call

[ NL ] memberRef

call:

'(' [ NL ] fParams [ NL ] ')'

fParams:

expression [ [ NL ] ',' [ [ NL ] fParams ] ]

ifElse:

'if' '(' rightExpr ')' then

then:

[ NLI ] expression // use NLI instead of NL, http://code.google.com/p/copute/issues/detail?id=28

[ NL ] scope [ [ NL ] 'else' block ] // if there is an 'else', force braces on the 'if', http://code.google.com/p/copute/issues/detail?id=21#c2

block:

[ NLI ] expression // use NLI instead of NL, http://code.google.com/p/copute/issues/detail?id=28

scope

switch:

'switch' '(' [ NL ] expression [ NL ] ')' [ NL ] '{' [ NL ] { case } [ default ] '}' // We force the '(' and ')' because, http://code.google.com/p/copute/issues/detail?id=30&can=1

case:

'case' rightExpr ':' [ NL ] sourcesOrEmpty [ NL ] // allow empty case ';', so that user can do noop for specific cases without a default, to make sure all cases are coded

sourcesOrEmpty:

sources

';'

default:

'default' ':' [ NL ] sourcesOrEmpty [ NL ]

class:

genre ID [ [ NL ] tArgList ] [ NL ] cDecl // if INTERFACE, then ID must begin with "I[A-Z]", else "[A-HJ-Z][^A-Z]", http://copute.com/dev/docs/Copute/ref/class.html#Inheritance

anonyClass:

genre [ [ NL ] funcArgs ] [ NL ] cDecl

genre:

'case'

'class'

'mixin'

'interface'

tArgList:

'<' tArgs '>'

tArgs:

[ NL ] tArg [ [ NL ] ',' [ tArgs ] ]

tArg:

[ variance ] ID [ tArgList ] [ tConstrain ] // the 'tArgList' is for a higher-kinded type parameter

variance:

'+'

'-'

tConstrain:

subTypeOf [ NL ] type [ superTypeOf [ NL ] type ]

superTypeOf [ NL ] type

subTypeOf:

':'

superTypeOf:

'<:'

cDecl:

[ inherit [ NL ] ] [ constructor [ NL ] ] cBody // semantic analysis must not allow 'constructor' for 'interface' and 'mixin'

inherit:

'inherits' cTypes

constructor:

[ 'private' [ NL ] ] fDecl

cTypes:

[ NL ] cType [ [ NL ] ',' [ cTypes ] ]

cType:

ID [ tParamList ] // ID is class

anonyClass

tParamList:

'<' types [ NL ] '>'

cBody:

'{' [ [ NL ] cMember { [ ',' ] [ NL ] cMember } ] [ NL ] '}'

cMember:

[ 'private' ] cMemberSuffix

cMemberSuffix:

cmBody

class // Note, this can be a CASE

cmBody:

[ 'static' ] ID [ etters ] [ [ NL ] typeInit ] // this can be a 'CASE ID', when 'genre' is 'interface', because no ID without 'etters' in 'interface'

'case' ID

etters:

'(' access ',' access ')' // The modifier that precedes ID must not be 'private'

access:

'public'

'new'

'private'

// Operator sets

prefixop:

addop // http://stackoverflow.com/questions/2624410/what-is-the-purpose-of-javas-unary-plus-operator

NLPLUS // http://code.google.com/p/copute/issues/detail?id=23, where this terminal will conflict with an NL or addop expected, otherwise process as PLUS...

NLMINUS // ...or MINUS

'!'

'~'

TYPEOF // can be used with type parametrization to fork functionality, although this is equivalent to overloading and/or using an case class, so I may deprecate this

postfixop:

'++'

'--'

multiplyop:

'*'

'/'

'%'

addop:

'+'

'-'

shiftop:

'<<'

'>>'

'>>>'

compareop:

// '<' // use MORE instead and flip operands, conflicts with sequence type ('tParamList')

'>'

'<='

'>='

equalityop:

'=='

'!='

=== // compare the instance data (or overloaded), same as EQUAL for pass-by-value types

!== // compare the instance data (or overloaded), same as NOTEQUAL for pass-by-value types

assignop:

'='

'&='

'|='

'^='

'*='

'\='

'%='

'+='

'-='

'<<='

'>>='

'>>>='

'#='

Last edited by Shelby on Thu Dec 01, 2011 7:52 pm; edited 13 times in total

World Without Web

World Without Web

I like the use of the word serendipity. Now imagine a future where Copute does not happen.

Al Gore didn't create the internet, hackers at DARPA did by lying to the government.

I have to cheer Eric S. Raymond on this one.

http://esr.ibiblio.org/?p=3331#comment-310506

http://esr.ibiblio.org/?p=3331#comment-310483

http://esr.ibiblio.org/?p=3335

http://esr.ibiblio.org/?p=3335#comment-310551

http://esr.ibiblio.org/?p=3335#comment-310626

http://esr.ibiblio.org/?p=3335#comment-310703

Al Gore didn't create the internet, hackers at DARPA did by lying to the government.

I have to cheer Eric S. Raymond on this one.

http://esr.ibiblio.org/?p=3331#comment-310506

Yes, it’s true. I was there [in 1983]. See “a roomful of hackers with an innate distrust of hierarchy and a strong allergy to system designs with single-point vulnerability”. Our distrust extended to systems vulnerable to political single-point failures as well as technical ones.

http://esr.ibiblio.org/?p=3331#comment-310483

Do you get it yet, Ms. Love? You rely on open-source software written by me and my peers for “day-to-day functionality” every day you touch the Internet or anything that talks to it. But you don’t see it, because we’ve done our job so well that our part of the infrastructure is almost invisible to you. You are free to make snotty remarks about our inability to meet user needs only because we have created for you the luxury of ignorance. And you are able to dismiss our concerns as hysteria only because more layers of ignorance lie between you and the long struggle we have waged against similar power grabs in the past

http://esr.ibiblio.org/?p=3335

World Without Web...

...I’m going to sketch an all-too-plausible alternate history in which the World Wide Web never happened.

The divergence point for this history is in 1983-1984, when the leadership of DARPA lied through its teeth to Congress about who was being allowed access to the Internet. The pretense being maintained was that only direct affiliates of government-sponsored, authorized research programs were being allowed on. DARPA knew very well this wasn’t true; they had a broader vision of where the Internet might lead, one that wouldn’t be realized for another ten years. They viewed the yeasty, chaotic culture of casual use by all manner of ‘randoms’ (unauthorized people including, at the time, me) as what would later be called a technology incubator – a vast Petri dish from which amazing serendipities would spring in due time...

http://esr.ibiblio.org/?p=3335#comment-310551

>Sounds like you like government after all.

Remember, I attribute the takeoff of the Internet to DARPA deliberately lying to its political masters. This happened precisely because DARPA’s leadership knew it was impossible within the incentives operating on governments for them to tolerate the ‘randoms’ or the results DARPA foresaw.

http://esr.ibiblio.org/?p=3335#comment-310626

>Further, like most advocates of the state who say “if there was no government who would build roads?”

If you think this what-if qualifies me as an “advocate of the state”, you’re out of your fucking mind.

I don’t know what evolutionary path would have gotten a free market in telecomms to an Internet. I do know that we didn’t get to run that experiment, because there was a government-created telecomms monopoly firmly in place. Even after the AT&T divestiture our infrastructure retained the stamp of that monopoly in ways both large and small. We are just lucky that DARPA subverted the normal centralizing tendency of government at a crucial time.

http://esr.ibiblio.org/?p=3335#comment-310703

>But I will point to the existance of the SF mailing list as evidence that, just as Eric says, the DARPA folks were lying about off-program uset of the Arpanet.

Heh. I’d bet that half the DARPA program monitors were on that list. Here, on information and belief, is how I think their reasoning went:

“This list is fun. It’s also an experiment in a novel mode of communication (most historians, including me, think it was the very first Internet mailing list). As such, it’s legitimately part of our research into the consequences of cheap, robust wide-area networking. And so is letting on all those college kids who don’t technically qualify under the program charter. But if we try to explain that at the Congressional hearings, we’re sure as shit going to get Proxmired.”

It was a reasonable fear. William Proxmire trashed a lot of immensely valuable research in the course of his political grandstanding. Once or twice he even apologized afterwards, but the damage had been done. I’ve had it pretty strongly hinted to me that certain of those responsible believed lying to Congress was less risky than telling the truth where Proxmire could get wind of it.

I suspect the Internet program managers were neither the first nor the last to make that choice. And be justified in making it.

Last edited by Shelby on Mon Jun 20, 2011 7:01 am; edited 2 times in total

Ahhh, State monad and Traversable come clear in my mind and my explanation

Ahhh, State monad and Traversable come clear in my mind and my explanation

Finally I have a very good understanding and explanation of State monad, Traversable, and everything I have been working on this file.

Also, I have generalized the Traversable over the state-of-the-art in published research.

I am very satisfied with my explanation and code for the State monad, as well as the examples I gave for using it with ITraversable. Try and try and I doubt you will find any equivalently concise layman example implementations of State monad that are not in the obtuse Haskell syntax. Finally I can say that a common person can fully understand the State monad (and Traversable, Applicative, etc) without pulling their hair out for days and weeks (as I and many others have done).

Also I have now a sentence that begins with "Conceptually," at the start of the documentation for each of these (IFunctor, IApplicative, IMonad, State, ITraversable), which fully explains the significance of each one. Why should I care or use this? I have the answers succinctly now.

It is also illuminating that the Copute syntax is darn slick, concise, and not confusing.

I am ecstatic with this accomplishment and milestone.

Note this file is still a little bit incomplete (has some incorrect code in some of the mixin implementations).

I have attached my latest draft of the Copute grammar which is incompete and driving towards improving on what is at the Copute.com website, as discussed here:

https://goldwetrust.forumotion.com/t112p165-computers#4426

Also, I have generalized the Traversable over the state-of-the-art in published research.

I am very satisfied with my explanation and code for the State monad, as well as the examples I gave for using it with ITraversable. Try and try and I doubt you will find any equivalently concise layman example implementations of State monad that are not in the obtuse Haskell syntax. Finally I can say that a common person can fully understand the State monad (and Traversable, Applicative, etc) without pulling their hair out for days and weeks (as I and many others have done).

Also I have now a sentence that begins with "Conceptually," at the start of the documentation for each of these (IFunctor, IApplicative, IMonad, State, ITraversable), which fully explains the significance of each one. Why should I care or use this? I have the answers succinctly now.

It is also illuminating that the Copute syntax is darn slick, concise, and not confusing.

I am ecstatic with this accomplishment and milestone.

Note this file is still a little bit incomplete (has some incorrect code in some of the mixin implementations).

I have attached my latest draft of the Copute grammar which is incompete and driving towards improving on what is at the Copute.com website, as discussed here:

https://goldwetrust.forumotion.com/t112p165-computers#4426

- Code:

interface IFunctor< Sub<T> : IFunctor<Sub,T>, T+ >

{

map<A> : (T -> A) -> Sub<A>

}

// Functional programming (FP) paradigms are fundamentally about not repeating oneself.

// Functor enables the reuse of all functions of type, T -> A, to which we give the name "morphism functions".

//

// A functor has a 'map' method, which enables all morphism functions of type, T -> A,

// to operate on functors of type, Sub<T>, producing a result of type, Sub<A>.

// Conceptually, a functor maps all functions of type, T -> A, to functions of type, Sub<T> -> Sub<A>

//

// This enables the reuse of these morphism functions, without requiring specialized versions of type, Sub<T> -> Sub<A>.

// For example, if List<T> is functor, then a function of type, Int -> String, can convert a List<Int> into a List<String>.

// There is no need to code a specialized morphism function of type, List<Int> -> List<String>.

//

// A functor has some computation, data structure, or other form of state wrapped around the instance(s) of any type T.

// Thus all functions between unwrapped types are automatically lifted to functions between the wrapped types.

//

// The parametrized subtype, Sub<T>, has a 'map' method which returns an instance of the subtype, Sub<A>,

// where the type parameter T has been converted to a type A.

//

// The 'map' method inputs a function, T -> A,

// i.e. that function inputs a type T and returns a type A.

//

// Note that since 'map' is a method then it inputs 'this', so when viewed as a function,

// it has the type, Sub<T> -> (T -> A) -> Sub<A>.

//

// For example, given the input parameter to 'map' is a function of type, Int -> String,

// i.e. that inputs an integer and returns a string,

// then a (subtype of IFunctor that is a) list of integers, List<Int>,

// is converted to a list of strings, List<String>.

//

// Mapping is between the same subtype, Sub, i.e. Sub<T> -> Sub<A> and not Sub<T> -> Sub2<A>.

// This is because the subtype may not always be invertible (bijective)

// to the wrapped parametric type, T. For example, since Maybe<T>.none contains no instance of T

// (i.e. it can't be inverted to an unwrapped T), it is conceptually sensible to map it to an empty List<A>.

// However, there is no consistent model that will enable such a mapping and

// hold true in all general cases of destination subtypes.

//

// In category theory, a functor is a model of COMPOSABLE mappings between categories.

// In our case, each category is a concrete realization of the type, Sub<T>,

// i.e. the type parameter T is concrete type.

// Thus, our functor has a mapping from category Sub<T> to Sub<A>, given the morphism function, T -> A.

// A functor follows the laws that insure it is composable:

// http://en.wikipedia.org/w/index.php?title=Functor&oldid=433685926#Definition