Computers:

Copute's unique feature to maximize modularity and avoid LSP errors

Prior discussion of Gilad Bracha's "Constructors are Harmful":

https://goldwetrust.forumotion.com/t112p135-computers#4191

I have refined the solution since I wrote the above. Above I was proposing that static factories in an interface could have their return type parametrized to the implemented subtype class. That does not solve the problem. Static factories in an interface are necessary for other SPOT (single-point-of-truth) and boilerplate elimination reasons, e.g. see my implementation of 'wrap' (a/k/a 'unit') in an IMonad (IApplicative), and notice how much more elegant it is than the equivalent Scalaz. Note SPOT is also a critical requirement for maximizing modularity, i.e. ease of composition and reuse.

Rather, to accomplish abstraction of constructors, we need to nudge programmers to input factory functions, so that any code can be abstracted over another subtype of an 'interface' (i.e. instead of calling a 'class' constructor directly, input a factory function which returns the result of calling a constructor, so the caller can change the subtype being constructed). So the important point is that we want to force programmers to create an 'interface'(s) for all their 'class' methods, which is accomplished by not allowing a method implementation (i.e. a 'class' or 'mixin') to be referenced anywhere except in the 'inherits' declaration and the constructor call. This means the type of an instance reference can not contain an identifier which is a 'class' or 'mixin', thus forcing the type of all instance references to contain only identifiers which are an 'interface', i.e. instance references reveal the abstract interface, but do not indicate the implementation.
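A minimal sketch of the factory-function idea, written in Python rather than Copute (so nothing here is enforced by a compiler, it is only a convention). The names Shape, Square, RightTriangle and total_area are hypothetical, invented for illustration. The point is that total_area mentions only the interface in its types, and the caller chooses the constructed subtype by passing a factory.

```python
from typing import Callable, Protocol, Sequence


class Shape(Protocol):
    """The 'interface': the only kind of name used in instance reference types."""

    def area(self) -> float: ...


class Square:
    """A 'class' (implementation); named only where it is constructed."""

    def __init__(self, side: float) -> None:
        self.side = side

    def area(self) -> float:
        return self.side * self.side


class RightTriangle:
    """Another implementation of the same interface (two equal legs)."""

    def __init__(self, leg: float) -> None:
        self.leg = leg

    def area(self) -> float:
        return self.leg * self.leg / 2


def total_area(make: Callable[[float], Shape], sizes: Sequence[float]) -> float:
    """Constructs via the injected factory; never names Square or RightTriangle."""
    return sum(make(size).area() for size in sizes)


# The caller, not total_area, decides which subtype gets constructed.
squares = total_area(lambda s: Square(s), [1.0, 2.0])
triangles = total_area(lambda s: RightTriangle(s), [1.0, 2.0])
```

Swapping the factory changes the constructed subtype without touching total_area, which is the abstraction over constructors described above.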

So Copute will have a crucial difference from Scala and the other contenders (e.g. Ceylon), in that 'interface' and 'mixin' will be separated (not conflated in a 'trait'), and only 'interface' can appear in the type of instance references. Note that in Copute (just like for a 'trait' in Scala) 'mixin' and 'interface' may not have a constructor. Scala's linearised form of multiple inheritance is retained.

Note this unique feature of Copute (along with the elimination of virtual methods, a/k/a 'override') is also necessary to enforce the Liskov Substitution Principle (which relates to the concepts of covariance and contravariance):

(note some of the following specification is out-of-date with current specification in grammar and in my various notes around)

http://copute.com/dev/docs/Copute/ref/class.html#Virtual_Method

http://copute.com/dev/docs/Copute/ref/class.html#Inheritance

http://copute.com/dev/docs/Copute/ref/class.html#Static_Duck_Typing

http://copute.com/dev/docs/Copute/ref/function.html#Overloading

The following makes some good points, and I applaud the clear explanations of the benefits of the pure lambda calculus.

However, OOP (subtyping) is not arbitrary, because it enables more deterministic partitioning (divide-and-conquer) of the design space, which can aid in the ability to reason about large design spaces (imagine the entire body of software being granularly reusable), as compared to (Haskell's or Scalaz's emulation using implicits) structural subtyping (ad-hoc polymorphism).

I am working on a granular approach to mixing the pure lambda calculus and subtyping.

http://apocalisp.wordpress.com/2011/05/30/imperative-vs-functional-programming/

On the utility of the FP approach

Referential transparency buys you modularity (the extent to which components of a program can be separated and recombined) and compositionality (the extent to which the whole program can be understood by understanding the components and the rules used to combine them).

Type systems also get more sophisticated to the extent that programs are pure. It’s easier to infer types for pure programs than it is for impure ones, for example. You also gain things like type constructor polymorphism (a.k.a. higher-kinded types) and higher-rank types. It’s my experience that in a sufficiently sophisticated program (such as a compiler for a programming language), you either use these abstractions or you repeat yourself.

Sophisticated type systems then in turn aid in program inference, which is the extent to which the computer (or the programmer with the aid of the computer) can infer the correct program from type information (think tab completion, only moreso). Program inference is currently an active area of research.

As a side-note a lot of OO folks are discovering the functional approach as a tool to aid in modular design. They call it “dependency injection”, “inversion of control”, “interpreter pattern” and the like.

A word on OO and the arbitrary

In OO, as commonly practiced, the choice of distinguished argument is arbitrary. Consider this function:

- Code:

K(x, y) = x

If we were to write this in OO style, then on which object, x or y, should the function K be dispatched? Should it be x.K(y), or y.K(x)? It’s arbitrary.

On “types of languages”

I want to get clear on some concepts. First the question of “types of programming languages”. I don’t think it’s helpful to divide programming languages into “functional”, “object-oriented”, “imperative”, etc. Sure, certain languages are better suited to certain styles of programming, but it’s important to differentiate on essentials and not mere aesthetics.

Functional programming is a restriction that the programmer puts on his programs. Having a language with a compiler that will warn you if you’re breaking referential transparency is helpful, but not essential. I do functional programming in Java, for example, which most people consider an “imperative OO” language.

My followup on my above assertion:

http://apocalisp.wordpress.com/2011/05/30/imperative-vs-functional-programming/#comment-4534

Another of my followups:

http://apocalisp.wordpress.com/2011/05/30/imperative-vs-functional-programming/#comment-4535

I think it is important to note that OOP and imperative programming are orthogonal concepts (the quote of Jason in the article appears to have conflated them):

http://en.wikipedia.org/wiki/Referential_transparency_(computer_science)#Contrast_to_imperative_programming

Imperative programming is the antithesis of referential transparency, in that the former must be evaluated in a specific order. Whereas, the OOP concepts encapsulation (i.e. information hiding), inheritance, interface, and polymorphism can be referentially transparent.

There is an equivalence (not a dichotomy) between FP and OOP in terms of the "Expression Problem" (Wadler), in that it can be solved by either adding new functions or case classes respectively.
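To make the two sides of the "Expression Problem" concrete, here is a small sketch in Python. All names (Lit, Add, evaluate, show, Node, NegNode, and so on) are hypothetical and chosen only for illustration. The first half fixes the variants and stays open to new functions; the second half fixes the operations and stays open to new subtypes.

```python
from dataclasses import dataclass
from typing import Protocol, Union


# Function-oriented decomposition: fixed set of variants, open set of functions.
@dataclass(frozen=True)
class Lit:
    value: int


@dataclass(frozen=True)
class Add:
    left: "Expr"
    right: "Expr"


Expr = Union[Lit, Add]


def evaluate(e: Expr) -> int:
    if isinstance(e, Lit):
        return e.value
    return evaluate(e.left) + evaluate(e.right)


def show(e: Expr) -> str:
    """A new operation: added without editing Lit, Add, or evaluate."""
    if isinstance(e, Lit):
        return str(e.value)
    return f"({show(e.left)} + {show(e.right)})"


# Subtype-oriented decomposition: fixed set of operations, open set of subtypes.
class Node(Protocol):
    def evaluate(self) -> int: ...


@dataclass(frozen=True)
class LitNode:
    value: int

    def evaluate(self) -> int:
        return self.value


@dataclass(frozen=True)
class AddNode:
    left: Node
    right: Node

    def evaluate(self) -> int:
        return self.left.evaluate() + self.right.evaluate()


@dataclass(frozen=True)
class NegNode:
    """A new subtype: added without editing LitNode or AddNode."""

    inner: Node

    def evaluate(self) -> int:
        return -self.inner.evaluate()
```

Adding a variant to the first half means editing every function, and adding an operation to the second half means editing every subtype; each side is easy where the other is hard. Both halves are referentially transparent.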

So imperative programming is the "evil" which FP should be criticizing, not OOP.

Replace "equivalence" with "duality" (the mathematics definition, not the "dichotomy" definition where dichotomy means logical opposite complement or mutually exclusive contradiction). FP and OOP are not dichotomic paradigms (rather they are orthogonal, i.e. mutually independent, and can be mixed), and they are duals in terms of their equivalent ability to solve the "Expression Problem".

http://en.wikipedia.org/wiki/Duality_(mathematics)

Note, above I am referring to the exclusive notion of FP, i.e. referential transparency. There is also an inclusive notion of FP which muddles definitions and understanding (and leads to conflation of terms):

http://www.artima.com/weblogs/viewpost.jsp?thread=166742

Also, replace "or case classes" with the more general "or subtypes".

http://beust.com/weblog2/archives/000490.html (read from Brian Slesinsky's comment down)

http://lambda-the-ultimate.org/node/2927#comment-43268

The point is the one I already made. Imperative is always the antithesis of side-effects-free. Pure (referentially transparent) is never imperative and vice-versa. They are dichotomies, i.e. mutually exclusive. If I am wrong, I would appreciate an example? (see discussion of your example below)

1) Unless I have misunderstood your description of your point-of-view, it seems you are referring to the inclusive notion, which may be more clear from reading #2 below.

2) Order-of-execution dependence (i.e. imperative) is not side-effects-free, and thus is not pure FP. If your sub-expressions are pure FP, but your composition of them is imperative (sequenced in an execution order), then the composition of the dichotomic paradigms is not pure (even though the sub-expressions are), i.e. they do not mix without choosing one side of the contradiction. If the composition of the pure FP expressions is not execution order dependent, then it is pure and not imperative. Note, I assert that it is possible for a pure function to contain imperative composition which calls only pure functions (the rules I am working on have a few more requirements), thus the contradiction shifts back to pure (this is what I mean by my work on a granular mixing), yet while the function is pure, the internals of the function remain order-dependent and impure.

Referential transparency means the function call can be replaced with its cached return value, anywhere in the program it was called. It thus follows that order-of-execution does not matter, except in a trivial case. In pure FP, the hierarchy of function calls dictates an order-of-execution only where there is no reuse of a function, because the compiler is free to compute and cache values of a sub-function call before executing its caller, and to execute a pure function with different sets of arguments concurrently.
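A small Python sketch of the caching claim. The functions g and f are hypothetical stand-ins for any pure functions; functools.lru_cache plays the role of the cache a compiler would be free to introduce.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def g(n: int) -> int:
    """Pure: the result depends only on n, so it is safe to cache."""
    return n * n


def f(a: int, b: int) -> int:
    """Pure: the result depends only on a and b."""
    return a - b


# Compute the sub-calls of f(g(3), g(4)) in one order...
x1 = g(3)
y1 = g(4)
first = f(x1, y1)

# ...and in the opposite order; these calls are answered from the cache.
y2 = g(4)
x2 = g(3)
second = f(x2, y2)
```

Because g is referentially transparent, replacing the second pair of calls with the cached values can not change the result, and neither can the order in which the two sub-calls run.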

Rúnar said:

"Pure and imperative are orthogonal concepts"

Disagree, they are dichotomies, i.e. mutually exclusive (dependent because they are contradictory, can not be mixed without one overriding the other). Orthogonal means mutually inclusive (independent, freely intermixed without either paradigm contradicted).

Rúnar said:

"See the referentially transparent imperative program in the post."

I assume you are referring to where you wrote in the article, "We can also do “OO” in Haskell...".

a) Your example is using a monad (with 'do' syntactical sugar to obscure the nested 'bind' calls) to abstract side effects. Although abstraction is an "OO" concept, I assume your point is that purely functional can be written in an imperative style. Let's not conflate "OO" with imperative here, as OOP is orthogonal (not mutually exclusive) to pure FP, i.e. they can be intermixed without a contradiction. Whereas, imperative programming can not be mixed with FP (i.e. not mutually inclusive) without causing some level of the composition to be impure.

b) That example is imperative and not purely functional, because it has side-effects (e.g. printing to stdout). Yes, you can force Haskell to do impure imperative code.

Whereas, for example, the State monad can be used to simulate global state in a purely functional (non-imperative) paradigm, i.e. only the pure functions in the composition that access the state are imperatively composed (order-dependent), and pure functions (that do not access the state) remain order-independent, when composed with the former using 'bind'. Here is an excerpt from my work on the State monad, which is not yet uploaded to my site.

// When exclusively not composing impure morphism functions that operate on state (i.e. that do not return a copy of modified state),

// the state monad composition of morphism functions (using 'bind' and 'map'),

// isolates the order-dependency drawback of global state to those pure morphism functions that operate on state,

// and any composed pure morphism functions, that do not input state, remain order-independent.

// Thus the state monad is a granular composition concurrent programming paradigm.
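The excerpt above can be illustrated with a tiny State monad written in Python. This is only a sketch under my own assumptions, not the Copute implementation: a stateful computation is modelled as a pure function from a state to a (value, new state) pair, and the names unit, bind, fmap, tick and two_ticks are hypothetical.

```python
def unit(a):
    """Wrap a plain value; the state passes through untouched."""
    return lambda s: (a, s)


def bind(m, k):
    """Sequence m, then feed its value to k, threading the state between them."""
    def run(s):
        a, s1 = m(s)
        return k(a)(s1)
    return run


def fmap(m, f):
    """Apply a plain function f : a -> b to the value; f never sees the state."""
    def run(s):
        a, s1 = m(s)
        return (f(a), s1)
    return run


def tick(n):
    """Operates on state: returns the current counter and the incremented counter."""
    return (n, n + 1)


# Order-dependent part: the two ticks are threaded through the state by 'bind'.
two_ticks = bind(tick, lambda a: bind(tick, lambda b: unit(a - b)))

# Order-independent part: abs does not input state, so the final state is unchanged.
with_abs = fmap(two_ticks, abs)

result = two_ticks(0)
result_abs = with_abs(0)
```

Every function here is pure; only the functions that take the state (here tick) are sensitive to where they sit in the chain of 'bind' calls.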

"This is an imperative program with no side-effects."

No, it is a pure program.

That is why I asked you what your definition of "imperative" is, because it seems your eyes are fooled by the 'do' syntactical sugar. The types of the pure functions determine whether they are composed purely or imperatively, not the ordering of the syntactical sugar. That composition flexibility is the beauty of the State monad, as I explained already.

"You talk a lot and say little."

I am thinking you did not yet have the epiphany, so you did not absorb what I wrote. Let me try to clarify below.

"I think you’re conflating “order-of-execution” with ordinary causal sequencing. In the expression f (g x), is it important that we execute g x before applying f to it?"

No, I covered that already in my prior reply. Concurrency in the pure lambda calculus is only possible when we reuse functions more than once. The key is that order is not forced when there is reuse, we can calculate the same function concurrently or in opposite order, e.g.

f( g x )( g y ) // or for those who prefer the notation f( g( x ), g( y ) )
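A sketch of that expression in Python, using the standard concurrent.futures module. The bodies of f and g are hypothetical; any pure functions would do.

```python
from concurrent.futures import ThreadPoolExecutor


def g(n: int) -> int:
    """Pure: no shared state is read or written."""
    return n * n


def f(a: int, b: int) -> int:
    """Pure: combines the two results."""
    return a + b


# Left to right.
sequential = f(g(3), g(4))

# Opposite order of evaluating the two uses of g.
gy = g(4)
gx = g(3)
reordered = f(gx, gy)

# Both uses of g submitted to a thread pool at once.
with ThreadPoolExecutor(max_workers=2) as pool:
    future_x = pool.submit(g, 3)
    future_y = pool.submit(g, 4)
    concurrent = f(future_x.result(), future_y.result())
```

All three evaluation strategies give the same value because g is pure; the only forced ordering is that f runs after both of its arguments exist.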

"The sequencing isn’t imposed by any side-effects. It’s clear when you look at the type of (>>=) :: m a -> (a -> m b) -> m b. The second action requires the a from the first action (of type m a)"

You are confusing the purity of the functions being composed with the purity of the composition. Indeed the types of the functions determine whether they are dependent on the prior 'bind' call, but in some uses of a monad, a = b. For those cases, the composition is pure and not imperative.

As I said above, do not be fooled by the do syntactical sugar, the types of the composition control whether the composition is pure or imperative. Pure and imperative are opposites. Please try to understand.

Also I forgot to say that printing to the screen (twice) is order dependent. I think you agree there is order-dependence in the example in your article.

I am in a rush here and I misstated the point about a = b. The only relevant point is that the types of the morphism function determine if the composition is pure or imperative. I will try to follow up with a clear example later.

Haha. Thanks.

Blame God for Godel's second incompleteness theorem for the inability to declare a consistent theory of the barrier between pure and imperative.

Pureness is relative (to lack of imperative), like everything else existential. Read Hume.

Impure composition of a pure API can render the order-independence within the pure code imperative relative to the external state. If I accepted your notion of imperative, then everything is imperative, and there is no such thing as pure code. Rather I place the barrier at the border between the pure API and the impure composition, which is deterministic relative to static typing.

You place the barrier for some arbitrary reason on pure monadic composition, which orders the monad state purely, which is not conceptually different than how normal function composition orders the final return value (which could also be considered a state and composed impurely).

I think I made a mistake. As long as the State monad is pure, the imperative code is not the monadic binding of functions that access the state, but rather any impure composition of the resultant state. The IO monad is obviously impure as soon as it calls a system function.

Continuation from the last post on prior page of this thread

http://apocalisp.wordpress.com/2011/05/30/imperative-vs-functional-programming/#comment-4561

It was censored, but I have a copy here.

Definitions:

'pure' = referentially transparent

'impure' = 'imperative' = not referentially transparent = referentially opaque

Rúnar said:

"In my view “imperative” = “monadic”"

Thus, you are claiming that 'imperative' = all pure function composition, h : (a -> b) -> (b -> c) -> a -> c; h f g x = g( f x ). Thus you are claiming that 'imperative' is not distinguishable from pure FP.

The proof for the state monad is that the morphism function m : a -> State s b; m t = \x -> g (t,x), may call a function g : (a,s) -> (b,s). The monadic binding is pure function composition on the tuple, e.g. f( g (a,x) ).

That the ordering of pure function composition can produce a final result of type c or (c,s), is irrelevant to the concept of 'imperative'.

"No it isn’t."

My initial succinct comment was correct, 'imperative' is the antithesis of purity-- there is no other definition that gives imperative any meaning. My followup reply correctly predicted that your definition of imperative is muddled.

My further verbose replies were an attempt to try to understand how you could distinguish some forms of ordering of pure functional composition from others. I was trying to understand and explain what significance you could apply to the abstraction of a monad. The monad abstracts (wraps) some state or computation, so that it is possible to compose functions which map unwrapped types, a -> b, with those that map wrapped types, a -> m a. I explained that for example for the state monad, the composed functions of type, a -> b, do not operate on the state, thus they certainly can't be 'imperative' relative to the state. And as I proved above, the composition of functions of type, a -> State s b, is also pure function composition returning a tuple. You try to make a distinction that monadic ordering determines the result tuple, but the ordering of any pure function composition determines the result. There is no distinction.
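The claim that composing functions of type a -> State s b is the same thing as ordinary composition of tuple functions (a,s) -> (b,s) can be checked with a short Python sketch. The helper to_state mirrors the m t = \x -> g (t,x) definition above; g1, g2 and kleisli are hypothetical names, and the state is just a tuple used as a log.

```python
def g1(pair):
    """(a, s) -> (b, s): measures the text and records the step in the state."""
    text, log = pair
    return (len(text), log + ("len",))


def g2(pair):
    """(a, s) -> (b, s): doubles the number and records the step in the state."""
    n, log = pair
    return (n * 2, log + ("double",))


def to_state(g):
    """Lift a tuple function to a -> State s b, i.e. t -> (x -> g((t, x)))."""
    return lambda t: lambda x: g((t, x))


def kleisli(m1, m2):
    """Monadic composition of two functions of type a -> State s b."""
    def composed(a):
        def run(s):
            b, s1 = m1(a)(s)
            return m2(b)(s1)
        return run
    return composed


# Monadic composition, run on the value "abc" with an empty log as the state.
monadic = kleisli(to_state(g1), to_state(g2))("abc")(())

# Plain function composition on the (value, state) tuple.
plain = g2(g1(("abc", ())))
```

Both routes compute the same tuple, which is the sense in which the monadic ordering is no different from the ordering of any other pure function composition.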

The bottom line is that 'imperative' is any function that is not referentially transparent. Period. No corner cases, no muddled definition that has no meaning.

In my next comment, I will explain why the IO monad is impure and thus 'imperative', even in Haskell.

The IO monadic composition in Haskell is claimed[1] to be pure because the World (as abstracted by IO a), and not an instance of type a, is an input and output for each action function, i.e. it is assumed that for a given state of the potentially infinite state-machine in the World, the same World state will always be returned[2]. The problem here is that the World state includes time, and thus it never has the same state. This is what I was referring to when I mentioned Coase's and Godel's theorems. The lambda calculus requires the function to be beta-reducible[3], but the structure of the World is never considered by the Haskell type checker[4][5]. Thus this is a hack to claim[1] that side-effects don't matter to purity, by assuming that the World input type of the IO action function is computable, but never having the compiler check its infinite structure. In short, the World's structure is uncomputable, which relates to undecidability and the Halting theorem.

So the IO monad is actually impure (i.e. 'imperative') and Haskell is lying about it[5]. There is an analogy to virtual methods, which at compile-time comply with the variance of the Liskov Substitution Principle for the method parameters and return types, but fail at run-time the pre- and post-condition behavioral semantics of LSP (which is unchecked by most languages). Scala makes it easier to lie about purity, because its type system does not even check for purity, so any kernel function that interacts with the world is impure.

For a language that allows the declaration of a function's type to include whether it is pure or not (e.g. Copute), as I previously stated, the barrier between 'imperative' (i.e. 'impure') and 'pure' is determined by the type of the function (the API).

[1] - [5] The links for the footnotes follow in the next reply.

[1] Section 7, Tackling the Awkward Squad, Peyton-Jones, "this is an important caveat...I have not proved it"

[2] Section 2.2, Tackling the Awkward Squad, Peyton-Jones

[3] http://en.wikipedia.org/w/index.php?title=Lambda_calculus&oldid=433678424#Computable_functions_and_lambda_calculus

[4] http://www.reddit.com/r/haskell/comments/8bhir/why_the_io_monad_isnt_a_dirty_hack/c08s5bc

[5] http://kawagner.blogspot.com/2007/02/why-monads-are-evil.html?showComment=1170781260000#c2061819858844484763

This is my final post to this blog page. I hope this helps you see the light.

I read "Tackling the Awkward Squad" again, and it clearly says at the end of sections 2 and 7, that where the IO is used, the program is imperative and impure. So I am not disagreeing with that research paper by Peyton-Jones, who is one of the principal creators of Haskell. He makes the point that IO should normally be at the top-level functions which call into a core of pure functions. And the point of the IO type is to delineate the impure, imperative portions of the code in a language that is otherwise always pure. So when these types are used, Haskell is not a 100% pure, declarative language. And that is a good thing. This is just Haskell's way of mixing pure and impure code.
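The "impure top-level calling into a pure core" arrangement can be sketched in Python as follows. This is only an analogy, since Python has no IO type to mark the boundary; the names greeting and shell are hypothetical, and the input is simulated with a lambda so the sketch runs without a terminal.

```python
import io
from typing import Callable, TextIO


def greeting(name: str) -> str:
    """Pure core: the result depends only on the argument."""
    return f"Hello, {name.strip().title()}!"


def shell(read_line: Callable[[], str], out: TextIO) -> None:
    """Impure wrapper: the prompt must be written before the answer is read,
    so the statements here are order-dependent."""
    out.write("Name? ")
    name = read_line()
    out.write(greeting(name) + "\n")


# Drive the shell with simulated input and capture what it writes.
buffer = io.StringIO()
shell(lambda: "ada lovelace\n", buffer)
transcript = buffer.getvalue()
```

Only shell touches the outside world; greeting can be cached, reordered or tested in isolation, which is the benefit of keeping the impure layer thin.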

The World state is not reversible in time, because it is impossible to cache the infinite states of the World for each discrete time (besides, time is continuous, which is another reason there are infinite states). Haskell does not allow the World in "IO a" to be reversed in time, i.e. all action functions occur in forward time order and thus the functions that input IO are imperative and no longer declarative. Thus, this restriction on order does not make the action functions pure, because a pure function must be reversible, because irreversibility is a side-effect. For example, you write some output to the World, then you read some input which depends on the user having the output before making the input. Thus the output is a global side-effect in the World that impacts the input. Contrast this with functional reactive programming, which allows inputs and outputs to occur in any order, i.e. it is declarative and order-independent. Haskell can do pure functional reactive programming, and to interface a stateful world, every functional reactive program will need an impure, imperative "wrapper", which is what IO is.

See also my comments on this page, Pure Functional Language: Haskell

=========================

http://apocalisp.wordpress.com/2011/05/30/imperative-vs-functional-programming/#comment-4566

Okay, since you censored my last 2 posts, I will send you this message via your blog, since I can not seem to find your contact email address anywhere.

Rúnar wrote:

"Imperative programming is just Kleisli composition"

John Hughes apparently says you are wrong. I assume you know he helped create Haskell, and is very prominent in the field of FP:

http://www.cse.chalmers.se/~rjmh/afp-arrows.pdf

Section 1.3 Arrows as Computations, Programming with Arrows

Hughes wrote:

"monads..., help to structure purely functional code, with not an imperative operation in sight"

Note I am publishing all of the censored comments to the web, including those censored by Tony Morris over at his blog. And I am aware of your remark at Twitter:

http://twitter.com/#!/runarorama/status/84026046692851712

"Feeding a known troll: http://t.co/2ezUkL8 "

Enjoy the bliss.

Ah I see he uncensored my posts above.

Rúnar, thank you for uncensoring my 2 posts.

Let me try to close our discussion amicably.

"The noise-to-signal ratio in your comments exceeds my tolerance."

You added that. My verbose middle comments contained some errors (perhaps due to exhaustion, as they were written after midnight at the end of an 18 hour work day in an Asian internet cafe with multiple patrons simultaneously loudly singing different songs for hours, while also fighting an amoeba by taking flagyl, and I have 1 eye). The last 4 comments were written after I got some limited sleep (woken by a noisy parade).

I just realized why we were talking past each other.

Imperative means non-declarative, where declarative means, e.g., no "control flow". The ambiguity is in the definition of "control flow". A pure function creates a state change by creating new state, and not changing the input state. Thus, apparently some claim that pure FP is declarative, thus impurity is (a subset of) imperative.

I could accept that definition. Alternatively, I already implied early in our discussion that I could agree that all non-declarative programming is imperative.

Rúnar, are you saying that only Kleisli composition is imperative? Or, are we in agreement that monadic composition generalizes to normal pure function composition, and thus all pure function composition would be imperative, where you said "state transition" (i.e. state creation) is imperative?

Pure function composition is ordered and causes state creation ("transition") whether it be in a monad, Kleisli, or otherwise. Your apparent (?) distinction on monadic and Kleisli led me to believe that you were saying only some FP composition is imperative.

For novice readers, the Kleisli arrow is the function, a -> m b. The arrow "->" is the general function. A function is either impure or pure, thus either changes or creates state respectively. Some forms of declarative programming (e.g. HTML, CSS, etc.) are stateless, and (if I remember correctly) this is possible because they are not Turing complete.
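For novice readers, a small Python sketch of Kleisli arrows and their composition, with Optional standing in for the monad m (None means "no result"). The names kleisli, parse_int and reciprocal are hypothetical.

```python
from typing import Callable, Optional, TypeVar

A = TypeVar("A")
B = TypeVar("B")
C = TypeVar("C")


def kleisli(
    f: Callable[[A], Optional[B]], g: Callable[[B], Optional[C]]
) -> Callable[[A], Optional[C]]:
    """Compose two Kleisli arrows a -> m b and b -> m c into a -> m c."""
    def composed(a: A) -> Optional[C]:
        b = f(a)
        if b is None:
            return None  # the first arrow failed, so the second is never applied
        return g(b)
    return composed


def parse_int(text: str) -> Optional[int]:
    """Kleisli arrow str -> Optional[int]."""
    try:
        return int(text)
    except ValueError:
        return None


def reciprocal(n: int) -> Optional[float]:
    """Kleisli arrow int -> Optional[float]."""
    return None if n == 0 else 1 / n


parse_then_invert = kleisli(parse_int, reciprocal)
```

Both arrows and their composition are pure: parse_then_invert returns the same result for the same string every time, and it creates new values rather than changing any existing state.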

I want to share my impromptu thoughts about why pure FP might be considered declarative. Although there is a structure in function composition which implies a logical order-of-operations, the purity allows the order-of-execution to deviate without any impact on the final result. Structure with order-of-execution independence is one of the general characteristics of a declarative paradigm; whereas, the nature of an imperative program is that the final state depends on the order-of-execution, because the lack of purity means that function state transitions can not be isolated from each other. Seems to be a slam dunk against Rúnar's confusion of terms. Maybe he realized it too:

http://apocalisp.wordpress.com/2011/05/30/imperative-vs-functional-programming/#comment-4570

===========================

http://kawagner.blogspot.com/2007/02/why-monads-are-evil.html?showComment=1311410012629#c7474729809428588396

Shelby wrote:

I have refined my understanding, which is explained at the following link:

https://goldwetrust.forumotion.com/t112p180-computers#4434

As Peyton-Jones elucidates in "Tackling the Awkward Squad", IO Monad is simply (one of) Haskell's way(s) of mixing impure, imperative code with pure, declarative code. To the extent that you can make your interface to the world declarative, i.e. pure functional (reactive), then you don't use IO Monad. But at least until the world (i.e. the OS) becomes pure functional, then we need a "wrapper" on our pure functional core-- which is IO Monad.

I gained clarity in the summary of my current open source project, which goes into more detail on this issue:

http://Copute.com

Last edited by Shelby on Fri Dec 09, 2011 10:02 pm; edited 11 times in total

Software engineering is a living organism (why we need Copute!)

http://elegantcode.com/2011/06/22/why-software-development-will-never-be-engineering/

http://esr.ibiblio.org/?p=3345#comment-311334

Exactly why I am creating Copute. The maximum division-of-labor and thus specialization is only possible if code is more reusable and in smaller orthogonal modules, so that programmers can specialize and have their efforts reused in different problem domains.

P.S. Daniel's Biblical "travel & knowledge will increase" pops up again, in this online poker (increasing travel virtually) to knowledge progress analogy.

The nature of software development, [...], leads itself to rapid evolution.

Consider what direction software development is evolving. Is it even evolving in the direction of rigid engineering practices or is it evolving in the exact OPPOSITE direction?

Ten years ago, we tried to use UML diagrams and CASE tools to develop software. Ten years ago waterfall was all the rage. Ten years ago, we thought that ten years in the future we would have programs that would allow us to build software in the same way that CAD tools allow for building machine parts.

Not only did it not happen. It went completely the other way. Now we are all talking about Agile. Now people don’t even remember what CASE tools are. Now we are building software without trying to define the whole system in UML diagrams.

The fact of the matter is software systems are unruly beasts!

The analogy was that online poker skills accelerated in the past 5 years due to more interaction and human involvement, i.e. lower barriers to COOPeration (note I also own COOPute.com). This is exactly what I am trying to do for software evolution by creating Copute, with its economic and technical models.

I replied to a comment:

http://elegantcode.com/2011/06/22/why-software-development-will-never-be-engineering/#comment-234084180

The difference between software and the brigde builder is that you can calculate if a bridge is stable and can support the weight and withstand the wind... I never could prove a piece of software to be correct with mathmatics. Just by using it and see if it didn't cause errors.

There are formal semantics for software in some languages, but your point is valid in the sense that the number of variables that have to be fit in much software is greater, and more importantly they are changing more rapidly. So it is not that we lack equational foundations, which are increasingly used in practical software engineering, at least by those on its leading edge (e.g. category theory, monads, programming paradigms, agile practices, agile-enforcing compilers, formal logic, the pure lambda calculus and referential transparency, behavioral static typing, etc.); it is that the dynamic complexity of variables in software is insatiable, because the better our software, the more variables of demand are created by the new possibilities revealed. Software is unique among engineering disciplines in that it is applicable to all of them.

Software is the encoding of human knowledge, which is always progressing.

There are some important equational foundations that we need to popularize so that competitive cooperation on software can accelerate.

http://esr.ibiblio.org/?p=3345#comment-311334

There’s something that many commenters have alluded to, but didn’t come out directly and say:

An engineer will specialize and become expert in some field, like bridge-building. The days when an engineer like Brunel could design bridges and ships and… are long gone.

Software developers know how to develop software, but they come to a big project with no knowlege of the problem domain. They have to learn that on-the-fly, gaining experience by making mistakes. Still worse, the people who commissioned the project in the first place don’t know what they really want, and find problems communicating with the software people. This is much harder than telling an engineering firm that you need a bridge from here to there, to carry so much traffic, and they provide a solution.

OTOH, the above is not all bad. Software’s inherent flexibility allows the business to explore solutions that they might otherwise not be aware of (if they have the nerve to keep funding the project). Trying out a suspension bridge, then a cantilever, then a simple truss design by building them all wouldn’t fly in the engineering world; the engineer would be expected to do that on paper and propose the best one.

Exactly why I am creating Copute. The maximum division-of-labor, and thus specialization, is only possible if code is more reusable and built from smaller orthogonal modules, so that programmers can specialize and have their efforts reused in different problem domains.

P.S. Daniel's Biblical "travel & knowledge will increase" pops up again, in this online poker (increasing travel virtually) to knowledge progress analogy.

Working on a new Introduction to Copute (also technical rationale)

Copute is a new computer language which aims to correct the mistakes and shortcomings in other programming languages, which have limited the scale at which software can be built from reusable modules.

Fitness

Degrees-of-freedom (DOF) dictates how well a system can adapt to cooperate and fit to a desired solution. For example, there would be gaps (i.e. errors in fitness) between a bicycle chain and the curved shape it wraps around, because the chain can only bend at the links. Employing instead a solid, but flexible metal strip, the metal would remain fit to the curve only with a sustained force-- the resisting force being a reduced DOF and an error in fitness. Permanent bending reduces DOF for wrapping to different curves.

Google can't even find a wise proverb, "if you use your power, then you lose it". The equivalent form is probably popular because it is misunderstood, "absolute power corrupts absolutely". The utility of power exists only because there are resistance forces. This generative fact applies to everything. For example, the power vacuum that gives rise to central authority is due to the vested interests, bickering, selfishness, and complacency (laziness) of the governed.

The proof is given by the well established fundamental physics equations. Power is the rate at which work can be completed. Power requires energy to produce work, but the more work that is performed, the greater the potential energy available to undo the work. This type of potential energy is due to the resistance forces encountered during the work to produce a particular configuration of the subject matter.[1] For example, a compressed spring wants to push back and undo the work.

So if our goal is to get more configurations of in our system with less work, then we need to reduce these resistance forces, i.e. increase the DOF. Think of springs opposing movement in numerous directions, and we want to remove them. Thus, if we succeed to increase DOF, it takes less work to produce our desired diversity of configurations, thus less power to produce them faster. And the configuration of the subject matter which results from our work, thus decays (i.e. becomes unfit slower), because the resistance forces are smaller. Requiring less power (and work), to produce more of what we want and faster, with a greater longevity, is thus more powerful (efficient) and explains the wisdom of the forgotten proverb.

Knowledge

Communication redundance (amplitude) is a form of power, because its utility exists due to the resistance to comprehension, i.e. due to noise mixed with the signal. The signal-to-noise ratio (SNR) depends on the DOF of both the sender and the receiver.

The difference between signal and noise, is the mutual comprehension (i.e. resonance) between the sender and the receiver, i.e. noise can become a signal or vice versa, depending on the coupling. In physics, resonance is the lack of resistance to the change in a particular configuration of the subject matter, i.e. each resonator is a DOF.[2] DOF is the number of potential orthogonal (independent) configurations, i.e. the ability to obtain a configuration without impacting the ability to obtain another configuration. In short, DOF are the configurations that don't have dependencies on each other.

Thus increasing the number of independent configurations in any system, makes the system more powerful, requiring less energy, work, and power, to obtain diversity within the system. The second law of thermodynamics says that the universe is trending to maximum entropy, i.e. the maximum independent configurations. Entropy (disorder) is a measure of the relative number of independent possibilities. So now we see why Coase's theorem holds, that the universe is trending towards the maximum free market, and any cost barrier will fail eventually. This is why small things grow faster, because large things reduce the number of independent configurations and thus require exponentially more power to grow. Thus in the point-of-view of change and the future, small things are exponentially more powerful than large things. The bell curve exists because the minority is exponentially more efficient (more DOF), and because a perfectly equal distribution would require infinite DOF and the end of the universe's trend to maximum disorder. Large things stagnate, rot, collapse, and disappear.

Knowledge is correlated to the DOF, because in every definition of knowledge one can think of, an increase in knowledge is an increase in DOF and vice versa. Software is unique among the engineering disciplines in that it is applicable to all of them. Software is the process of increasing knowledge. Thus one of the most critical characteristics of software is that it does not want to be static, and that the larger the components, thus the fewer the DOF, the less powerful (efficient) the software process.

Copute attempts to apply these concepts to software, by increasing the independence (orthogonality) of software components, so they can be composed into diverse configurations with less work. Independent programmers can leverage independent components with less communication and refactoring work, thus Copute is about increasing cooperation.

Below we will outline the major features of Copute which enable independent software components.

Pure Functional Programming

Pure functional programming (PFP) is fundamentally about not repeating oneself, i.e. the ability to compose (small) functions more freely, because it has following features:

PFP is never imperative, and is always declarative. In general, declarative languages (e.g. HTML, CSS) express the logic of the system, without forcing a particular order-of-execution. Declarative is one of the features required for concurrency. Due to purity, each the state transition for each function in PFP is independent (orthogonal) to all the others and to the order-of-execution, thus do not inhibit DOF as imperative programming does. Some people mistakenly state that PFP is imperative, but these are cases where a particular language compiler fudges (e.g. IO monad in Haskell[3]) or does not check the purity of a construct, to give apparency of purity within the semantics of the type system of the language, but is imperative in the semantics of state external to that type system.

PFP is mutually inclusive to OOP, not mutually exclusive. PFP and OOP are duals in that they each can be used to solve the Expression Problem for maximum DOF.[4] And category theory reveals that PFP and OOP are composable as follows.

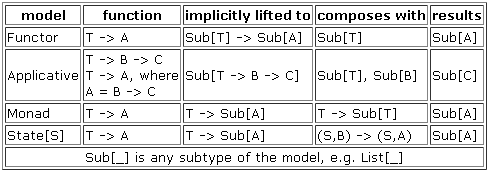

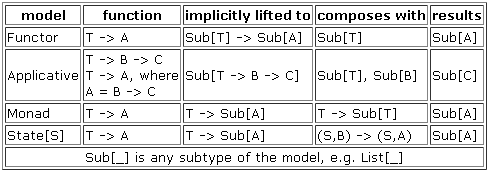

Although the DOF benefits derive from math, the composition benefits of PFP are intuitive because every programmer understands how to do composition of functions. The (category theory models of) higher-level object subtypes (OOP), can be composed with ordinary functions-- functions which input type T and return type A-- without having to write specialized versions of these functions for each possible subtype we create. The following OOP models are simply composing as if they are (and thus resonating with) ordinary functions. Read the Copute documentation for each of the following models to aid in understanding the following table.

Object Oriented Programming

Copute builds on several critical Scala innovations, such as higher-kinded generics, generic type parameter variance annotations, multiple inheritance of interface and implementation, and pattern matching with abstract data types (case classes). Copute adds a few critical refinements to the single-point-of-truth (SPOT), which drastically improves the DOF, conciseness, and intuitive understanding.

TODO: discuss single-point-of-truth. Also discuss application of granular purity to OOP.

[1] http://en.wikipedia.org/w/index.php?title=Energy&oldid=435292864

[2] http://en.wikipedia.org/w/index.php?title=Resonance&oldid=432632299#Resonators

http://en.wikipedia.org/w/index.php?title=Resonance&oldid=432632299#Mechanical_and_acoustic_resonance

[3] https://goldwetrust.forumotion.com/t112p180-computers#4434

[4] http://apocalisp.wordpress.com/2011/05/30/imperative-vs-functional-programming/#comment-4535

http://apocalisp.wordpress.com/2011/05/30/imperative-vs-functional-programming/#comment-4537

Fitness

Degrees-of-freedom (DOF) dictates how well a system can adapt to cooperate and fit to a desired solution. For example, there would be gaps (i.e. errors in fitness) between a bicycle chain and the curved shape it wraps around, because the chain can only bend at the links. Employing instead a solid, but flexible metal strip, the metal would remain fit to the curve only with a sustained force-- the resisting force being a reduced DOF and an error in fitness. Permanent bending reduces DOF for wrapping to different curves.

Google can't even find a wise proverb, "if you use your power, then you lose it". The equivalent form is probably popular because it is misunderstood, "absolute power corrupts absolutely". The utility of power exists only because there are resistance forces. This generative fact applies to everything. For example, the power vacuum that gives rise to central authority is due to the vested interests, bickering, selfishness, and complacency (laziness) of the governed.

The proof is given by the well established fundamental physics equations. Power is the rate at which work can be completed. Power requires energy to produce work, but the more work that is performed, the greater the potential energy available to undo the work. This type of potential energy is due to the resistance forces encountered during the work to produce a particular configuration of the subject matter.[1] For example, a compressed spring wants to push back and undo the work.

So if our goal is to get more configurations in our system with less work, then we need to reduce these resistance forces, i.e. increase the DOF. Think of springs opposing movement in numerous directions, and we want to remove them. Thus, if we succeed in increasing DOF, it takes less work to produce our desired diversity of configurations, and thus less power to produce them faster. And the configuration of the subject matter which results from our work thus decays (i.e. becomes unfit) slower, because the resistance forces are smaller. Requiring less power (and work) to produce more of what we want, faster and with greater longevity, is thus more powerful (efficient) and explains the wisdom of the forgotten proverb.

Knowledge

Communication redundancy (amplitude) is a form of power, because its utility exists due to the resistance to comprehension, i.e. due to noise mixed with the signal. The signal-to-noise ratio (SNR) depends on the DOF of both the sender and the receiver.

The difference between signal and noise is the mutual comprehension (i.e. resonance) between the sender and the receiver, i.e. noise can become a signal or vice versa, depending on the coupling. In physics, resonance is the lack of resistance to the change in a particular configuration of the subject matter, i.e. each resonator is a DOF.[2] DOF is the number of potential orthogonal (independent) configurations, i.e. the ability to obtain a configuration without impacting the ability to obtain another configuration. In short, DOF are the configurations that don't have dependencies on each other.

Thus increasing the number of independent configurations in any system makes the system more powerful, requiring less energy, work, and power to obtain diversity within the system. The second law of thermodynamics says that the universe is trending to maximum entropy, i.e. the maximum number of independent configurations. Entropy (disorder) is a measure of the relative number of independent possibilities. So now we see why Coase's theorem holds, that the universe is trending towards the maximum free market, and any cost barrier will fail eventually. This is why small things grow faster: large things reduce the number of independent configurations and thus require exponentially more power to grow. Thus from the point of view of change and the future, small things are exponentially more powerful than large things. The bell curve exists because the minority is exponentially more efficient (more DOF), and because a perfectly equal distribution would require infinite DOF and the end of the universe's trend to maximum disorder. Large things stagnate, rot, collapse, and disappear.

Knowledge is correlated to the DOF, because in every definition of knowledge one can think of, an increase in knowledge is an increase in DOF and vice versa. Software is unique among the engineering disciplines in that it is applicable to all of them. Software is the process of increasing knowledge. Thus one of the most critical characteristics of software is that it does not want to be static, and that the larger the components, thus the fewer the DOF, the less powerful (efficient) the software process.

Copute attempts to apply these concepts to software, by increasing the independence (orthogonality) of software components, so they can be composed into diverse configurations with less work. Independent programmers can leverage independent components with less communication and refactoring work, thus Copute is about increasing cooperation.

Below we will outline the major features of Copute which enable independent software components.

Pure Functional Programming

Pure functional programming (PFP) is fundamentally about not repeating oneself, i.e. the ability to compose (small) functions more freely, because it has the following features:

- Pure: for each value for the input, a function can be replaced by a previously cached value of the result, i.e. the function doesn't depend on any external state.

- Higher-order: a function can be a value.

- Curried: a new function can be created by assigning a constant value to an input of an existing function.

- Declarative: function composition expresses logical order-of-operations, but due to the replacement principle for purity, the order-of-execution is independent.

- Mathematical: lambda calculus is PFP, which allows us to use category theory to maximize DOF.
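The first four features in the list above can be sketched in a few lines. This is only an illustration in Python (not Copute), and the names `square`, `compose`, `add` and `add_ten` are made up for the example.

```python
from functools import lru_cache, partial
from typing import Callable


@lru_cache(maxsize=None)
def square(x: int) -> int:
    # Pure: same input, same output, so replacing a call by a cached result is safe.
    return x * x


def compose(f: Callable, g: Callable) -> Callable:
    # Higher-order: functions are accepted and returned as values.
    return lambda x: f(g(x))


def add(a: int, b: int) -> int:
    return a + b


# "Curried" in the sense of the list: fix one input to get a new function
# (strictly speaking this is partial application).
add_ten = partial(add, 10)

# Declarative: this states what is computed (add_ten after square),
# not when each piece has to be evaluated.
square_then_add_ten = compose(add_ten, square)

result = square_then_add_ten(3)  # add_ten(square(3))
```

Note that the cache on `square` is only valid because the function is pure; caching a function that read external state would silently return stale results.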

PFP is never imperative, and is always declarative. In general, declarative languages (e.g. HTML, CSS) express the logic of the system, without forcing a particular order-of-execution. Declarative is one of the features required for concurrency. Due to purity, the state transition for each function in PFP is independent of (orthogonal to) all the others and to the order-of-execution, and thus does not inhibit DOF as imperative programming does. Some people mistakenly state that PFP is imperative, but these are cases where a particular language compiler fudges (e.g. IO monad in Haskell[3]) or does not check the purity of a construct, to give the appearance of purity within the semantics of the type system of the language, while being imperative in the semantics of state external to that type system.

PFP is mutually inclusive to OOP, not mutually exclusive. PFP and OOP are duals in that they each can be used to solve the Expression Problem for maximum DOF.[4] And category theory reveals that PFP and OOP are composable as follows.

Although the DOF benefits derive from math, the composition benefits of PFP are intuitive because every programmer understands how to do composition of functions. The (category theory models of) higher-level object subtypes (OOP), can be composed with ordinary functions-- functions which input type T and return type A-- without having to write specialized versions of these functions for each possible subtype we create. The following OOP models are simply composing as if they are (and thus resonating with) ordinary functions. Read the Copute documentation for each of the following models to aid in understanding the following table.
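To make the "without having to write specialized versions" point concrete, here is a small sketch in Python. The `Maybe` and `Seq` classes and their `map` methods are hypothetical stand-ins for a functor-like model; they are not Copute's actual models. The ordinary function `length` (type T = str to type A = int) is written once and reused unchanged with both.

```python
from dataclasses import dataclass
from typing import Callable, Generic, Optional, Tuple, TypeVar

T = TypeVar("T")
A = TypeVar("A")


def length(s: str) -> int:
    # An ordinary function from T to A; it knows nothing about containers.
    return len(s)


@dataclass(frozen=True)
class Maybe(Generic[T]):
    value: Optional[T] = None
    present: bool = False

    def map(self, f: Callable[[T], A]) -> "Maybe[A]":
        # Apply f only when a value is present; otherwise stay empty.
        return Maybe(f(self.value), True) if self.present else Maybe()


@dataclass(frozen=True)
class Seq(Generic[T]):
    items: Tuple[T, ...]

    def map(self, f: Callable[[T], A]) -> "Seq[A]":
        # Apply f to every element, preserving order.
        return Seq(tuple(f(x) for x in self.items))


some = Maybe("abc", True).map(length)
none = Maybe().map(length)
many = Seq(("a", "bcd")).map(length)
```

Adding a third container type would require only its own `map`; `length` and every other ordinary function would compose with it as-is.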

Object Oriented Programming

Copute builds on several critical Scala innovations, such as higher-kinded generics, generic type parameter variance annotations, multiple inheritance of interface and implementation, and pattern matching with abstract data types (case classes). Copute adds a few critical refinements to the single-point-of-truth (SPOT), which drastically improves the DOF, conciseness, and intuitive understanding.

- Purity

- Unreferencable implementation

- No virtual re-implementation

- Static methods instead of implicits

- Disobscurity: e.g. one way to write a function instead of 6, no methods as operators, no.

- No exceptions

TODO: discuss single-point-of-truth. Also discuss application of granular purity to OOP.

[1] http://en.wikipedia.org/w/index.php?title=Energy&oldid=435292864

Stored energy is created whenever a particle has been moved through a field it interacts with (requiring a force to do so), but the energy to accomplish this is stored as a new position of the particles in the field—a configuration that must be "held" or fixed by a different type of force (otherwise, the new configuration would resolve itself by the field pushing or pulling the particle back toward its previous position). This type of energy "stored" by force-fields and particles that have been forced into a new physical configuration in the field by doing work on them by another system, is referred to as potential energy. A simple example of potential energy is the work needed to lift an object in a gravity field, up to a support.

[2] http://en.wikipedia.org/w/index.php?title=Resonance&oldid=432632299#Resonators

A physical system can have as many resonant frequencies as it has degrees of freedom.

http://en.wikipedia.org/w/index.php?title=Resonance&oldid=432632299#Mechanical_and_acoustic_resonance

Mechanical resonance is the tendency of a mechanical system to absorb more energy [i.e. less resistance] when the frequency of its oscillations [i.e. change in configuration] matches the system's natural frequency of vibration [i.e. natural change in configuration] than it does at other frequencies.

[3] https://goldwetrust.forumotion.com/t112p180-computers#4434

[4] http://apocalisp.wordpress.com/2011/05/30/imperative-vs-functional-programming/#comment-4535

http://apocalisp.wordpress.com/2011/05/30/imperative-vs-functional-programming/#comment-4537

Last edited by Shelby on Mon Jul 25, 2011 10:07 am; edited 4 times in total

Efficiency of purely functional programming

http://twitter.com/#!/kmett/status/74517454973444096

Going immutable in a strict language can cost you a logarithmic factor, but you get free persistence http://bit.ly/6T6lfr

http://stackoverflow.com/questions/1990464/efficiency-of-purely-functional-programming

Array access explains the logarithmic cost factor:

http://stackoverflow.com/questions/1255018/n-queens-in-haskell-without-list-traversal/1268285#1268285
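One common way the logarithmic factor and the "free persistence" both show up is an immutable array stored as a balanced binary tree with path copying. The sketch below is my own illustration of that general technique in Python, not code taken from the linked answers; `build`, `get` and `set_` are hypothetical names, and the length `n` is passed explicitly to keep the example short.

```python
from typing import Any, Sequence


def build(items: Sequence[Any]) -> Any:
    """Balanced binary tree over a non-empty sequence; leaves are the items."""
    n = len(items)
    if n == 1:
        return items[0]
    half = n // 2
    return (build(items[:half]), build(items[half:]))


def get(tree: Any, n: int, i: int) -> Any:
    """Read index i of an n-element tree in O(log n) steps."""
    while n > 1:
        half = n // 2
        if i < half:
            tree, n = tree[0], half
        else:
            tree, n, i = tree[1], n - half, i - half
    return tree


def set_(tree: Any, n: int, i: int, value: Any) -> Any:
    """Return a new tree with index i replaced; copies only the root-to-leaf path."""
    if n == 1:
        return value
    half = n // 2
    left, right = tree
    if i < half:
        return (set_(left, half, i, value), right)
    return (left, set_(right, n - half, i - half, value))


v1 = build(list(range(8)))
v2 = set_(v1, 8, 5, 99)  # v1 is untouched and still usable
```

A mutable array does both operations in constant time, which is where the log n comparison comes from; in exchange, `v1` and `v2` coexist and share every subtree off the updated path.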

I'm becoming skeptical about the claim that pure functional is generally log n slower:

https://goldwetrust.forumotion.com/t112p195-computers#4537

Discussion of tradeoffs for lazy programming:

https://goldwetrust.forumotion.com/t112p165-computers#4430

Last edited by Shelby on Thu Aug 25, 2011 5:58 pm; edited 3 times in total

BitCoin is socialist and not (and no online currency can be) anonymous

http://www.bitcoin.org/

The fundamental problem is that any online currency either requires a centralized trust authority which is subject to govt regulation, or a majority vote of decentralized authorities which is just socialism and the antithesis of what makes gold and silver money (he who holds the gold makes the rules, no third party authority or majority vote). I had come to this conclusion a long time ago in the Precious Metals forum during my analysis of whether an online currency (potentially backed by gold and silver) could survive govt retribution.

BitCoin can be defeated by a hacker who puts a virus on a majority of computers using BitCoin, or by govt legislation that requires every computer sold to run a firmware program that defeats it (even if a minority will remove the firmware illicitly):

http://forum.bitcoin.org/index.php?topic=12435.msg172811#msg172811

Thus the BitCoin algorithm has an attack vector, which encourages govt regulation of our computers.

No anonymity on the internet:

http://en.wikipedia.org/wiki/Bitcoin#Anonymity

============================

I should also be frank with my friends.

...at FinancialSense.com. The topic of Bitcoin, helped me find direction for it.

Spoken like a good collectivist:

Government debt should be settled, and outlawed going forward. A balanced budget amendment to the Constitution should be adopted.

I suppose you didn't realize that you were also outlawing the 2nd law of thermodynamics too. But I am not going to explain that one to you, because you will NEVER get it.

Btw, Bitcoin is vulnerable to attack by a government law which says every computer must contain a chip which the government can use to hijack the computer for purposes of regulating peer-to-peer protocols. This is because for money to gain enough supply to be viable, the protocol has to be fairly well known and static.

Last edited by Shelby on Tue Sep 20, 2011 11:15 pm; edited 2 times in total

Your neighborly Google, whose slogan is "Do no evil"

Your neighborly Google, whose slogan is "Do no evil":

http://www.stuff.co.nz/technology/digital-living/5217945/Google-Wi-Fi-snooping-lawsuits-can-proceed

Computers create heat and no potential energy

Note this applies to the hardware perspective, while in the software dimension, there is potential energy created (which is why software decays over time relative to the changing applications).

http://www.fibertown.com/pdf/uptimeinstituteheatdensitytrends.pdf

Power consumed by computer and communication products is totally converted to heat.

So this means that the work being done is not creating potential energy (sustained resistance forces), but rather permanently bending the structures of resistance, thus releasing heat (heat is disordered matter that is not useful, i.e. not "signal"). See the quotes of what I wrote previously about DOF. Remember that "work" is noise, and the information transmitted (resonated between sender & receiver) is the "signal". We want to eliminate "work" and increase information.

In a computer, these bending resistance forces are stored due to capacitance, i.e. the ability to store a charge of electrons with an insulator, and the fact that a transistor is a variable resistor (insulator) controlled by a charge applied to it. These resistance forces are reduced by making the transistors smaller, which is what causes Moore's Law (we can double the # of transistors on a silicon die every 18 months).

My previous treatise on physics and information:

https://goldwetrust.forumotion.com/t112p180-computers#4436

Shelby wrote:[...]

Employing instead a solid, but flexible metal strip, the metal would remain fit to the curve only with a sustained force-- the resisting force being a reduced DOF and an error in fitness. Permanent bending reduces DOF for wrapping to different curve

[...]

The proof is given by the well established fundamental physics equations. Power is the rate at which work can be completed. Power requires energy to produce work, but the more work that is performed, the greater the potential energy available to undo the work. This type of potential energy is due to the resistance forces encountered during the work to produce a particular configuration of the subject matter.[1] For example, a compressed spring wants to push back and undo the work.

So if our goal is to get more configurations of in our system with less work, then we need to reduce these resistance forces, i.e. increase the DOF. Think of springs opposing movement in numerous directions, and we want to remove them. Thus, if we succeed to increase DOF, it takes less work to produce our desired diversity of configurations, thus less power to produce them faster. And the configuration of the subject matter which results from our work, thus decays (i.e. becomes unfit slower), because the resistance forces are smaller. Requiring less power (and work), to produce more of what we want and faster, with a greater longevity, is thus more powerful (efficient) [...]

Versioning, forking, bug fixes, etc... in Copute.com

THIS IS NO LONGER THE MODEL.

Please note that this is a thought experiment and it may not be implemented for Copute. There is ongoing thinking on how to obtain the best free market pricing model. Preferably it should be entirely decentralized.

Some private writings solved most of the issues in the Copute.com economic model. The remaining issue is modifications to existing modules.

Summary of Economic Model

First a brief summary of the prior two posts. Copute will be a new open source (free) computer language that fosters reusability of modules of programming (to a finer granularity of module size than with other languages). Anyone can use Copute for free, even modify it, and there is no requirement to use the Copute.com marketplace when using the Copute language. In addition, Copute.com will offer a non-exclusive (the same modules can be offered elsewhere, even offered for free) marketplace for programmers to offer their modules to other developers, so that duplication of programming effort (modules) can be reduced. Any derivative software product created from these Copute.com modules will pay the greater of 1% of its gross sales, or 10% of the salaries[1] paid to develop that derivative software product. Copute.com will distribute these royalties to module owners (after deducting Copute.com's fee, perhaps 1% of the royalties) proportionally, based on an automated statistically sampled profiling of the derivative software in use to determine the percent of time the CPU spends in each module.
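The royalty arithmetic in the summary above can be sketched roughly as follows. This is only an illustration of the thought experiment: the function names, the module names and the sample figures are hypothetical, and the 1% fee is the "perhaps 1%" figure mentioned above, not a settled number.

```python
def royalty_due(gross_sales, dev_salaries):
    """Greater of 1% of gross sales or 10% of the salaries paid to develop
    the derivative product (rates taken from the summary above)."""
    return max(0.01 * gross_sales, 0.10 * dev_salaries)


def distribute(royalty, cpu_time_by_module, fee_rate=0.01):
    """Split the royalty among modules in proportion to sampled CPU time,
    after deducting the marketplace fee (fee_rate is an assumption)."""
    pool = royalty * (1.0 - fee_rate)
    total = sum(cpu_time_by_module.values())
    return {name: pool * t / total for name, t in cpu_time_by_module.items()}


# Hypothetical product: $1,000,000 gross sales, $50,000 development salaries.
due = royalty_due(1_000_000, 50_000)       # 1% of sales exceeds 10% of salaries
# Hypothetical profile: 75% of sampled CPU time in "parser", 25% in "sorter".
payouts = distribute(due, {"parser": 75, "sorter": 25})
for name, amount in payouts.items():
    print(name, round(amount, 2))
```

Note the profile values only need to be relative weights (sample counts or percentages), since they are normalized by their sum.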

Copute.com won't have a monopoly, because any other site can offer a similar marketplace for Copute modules (or even modules of any computer language, although the Copute language will be best for reusing modules). This marketplace is to make modules fungible and the importance of this is discussed here. Note that even though modules might be offered for free elsewhere, if the derivative software uses even one module from Copute.com that is not free elsewhere, then it will pay the same royalty to Copute.com. Thus the freedom of non-exclusivity does not render Copute.com's business model impotent. In fact, it adds inertia, and of course some module owners will not want to offer their modules for free elsewhere (they might offer them elsewhere not for free, which again would not hurt Copute.com's business model, but rather add inertia to it).

Module Improvement Ownership

But who owns the module when someone other than the original owner improves it (bug fix, refinement, etc)? I can't think of a metric of value for such improvements to modules. The best idea I have so far is to have a very flexible versioning scheme, give minimally 1% of "remaining value" for each fix, and give the option for existing owners of a module to award an increase to that minimum. Let me explain.

A module is created and offered by the owner(s) on Copute.com. This is open source, meaning anyone could offer an improvement. So how do we assign the ownership percentage of the improved version of the module? On the first improvement, the original owner has a 100% share of ownership, so we minimally and automatically award 1% of that to the submitter of the improved version. The original owner has the option to log in to his account on Copute.com and increase that minimum award (only the existing owners will have the knowledge to best evaluate an improvement and decide its value). So then there are two owners, and minimally the split is 99% and 1%. Then a second improvement is made, so minimally 1% of the original owner's 99% is automatically awarded, which is 0.99%. This 0.99% is deducted proportionally from the existing owners, so the first owner has 98.02%, the second has 0.99%, and the third has 0.99%. Either of the two prior owners can optionally award more, giving up some of their percent. Eventually, for example, it may reach a point where the original owner has, say, 50%, and each new improvement owner is awarded (minimally) 0.5%. The point is that the erosion of prior ownership slows down with each future improvement, unless the prior owners choose to award a higher value. This is desirable because of the law of diminishing returns (80/20 rule).
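The minimum dilution described above can be checked with a few lines of code. This is a sketch under my reading of the rule, namely that each new submitter gets 1% of the original owner's current share and that the award is deducted proportionally from all existing owners; the function name is hypothetical and optional higher awards by owners are not modeled.

```python
def award_improvement(shares, award_fraction=0.01):
    """shares: ownership percentages, index 0 is the original owner.
    Returns the new list with the submitter's share appended."""
    award = shares[0] * award_fraction      # e.g. 1% of the original owner's 99%
    keep = 1.0 - award / 100.0              # every existing owner keeps this fraction
    return [s * keep for s in shares] + [award]


shares = [100.0]                            # original owner only
shares = award_improvement(shares)          # first improvement
print([round(s, 2) for s in shares])        # [99.0, 1.0]
shares = award_improvement(shares)          # second improvement
print([round(s, 2) for s in shares])        # [98.02, 0.99, 0.99]
```

The shares always sum to 100%, and because the award is a fraction of a shrinking share, each successive minimum award is smaller than the last.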

Note that projects (e.g. Linux, Firefox, etc.) tend to have 1000s of bug fixes and improvements, thus the original creators would be diluted to near 0% at project-scale granularity if this model were applied. But within a project, the modules typically only require dozens of fixes/improvements, so this model encourages original owners to create smaller (more granular, thus more) reusable modules. So we see that the benefit of smaller things and reusability scales well economically; whereas, project-level granularity does not (it can not be fungible).

Also note that the above concept is economic ownership, i.e. the percentage of royalties, not control. If a module is released on the Copute.com marketplace, control is relinquished to the terms of the license, which allows unlimited modifications and forks but requires them to reside on the Copute.com marketplace. Not even Copute.com has any centralized decision control. The original owner of the module might release the original module elsewhere with different licensing terms (the Copute.com marketplace is non-exclusive), but that owner can not release the improvements elsewhere, and neither can the submitter of an improvement release it elsewhere (unless making the improvement to an identical fork of the same module in a different marketplace). So we see that Copute.com's forks potentially become unique from the forks in other marketplaces, unless all submitters of improvements submit to all marketplaces.

Versioning and Forking

The Copute language, and thus the complementary (and orthogonal) marketplace on Copute.com, is all about freedom-of-choice. Thus we don't want to limit it in any way. So submitters of improvements should be free to selectively omit prior improvements, i.e. to create a new fork in the version trail of the module. I have an idea for a version numbering scheme that accommodates this with explicit enumeration of omissions. A version number will be numbers separated by periods, e.g. "1.0.1", where each period identifies a fork. So the first version of a module is simply "1". If an improvement is made to that module, then the version becomes "2", the next "3", etc. If an improvement omits improvement "2" and keeps "3", then the version number is "1:3.1". If an improvement omits improvement "3" forward, then the version number is "2.1". If an improvement omits improvement "2" and keeps "3" through "5", then the version number is "1:3-5.1". If an improvement omits improvement "3" and keeps "4" through "5", then the version number is "2:4-5.1", which is equivalent shorthand for "1-2:4-5.1". The ".1" becomes a new fork and then it can also be forked, so version numbers can end up with multiple periods.
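To make the numbering scheme above concrete, here is a rough parser for the examples given. It is only a sketch of my reading of the scheme: a bare leading number N is taken as shorthand for "1 through N", later colon-separated parts list what else is kept, and everything after the first period is returned unparsed as the fork suffix. The function names are hypothetical, and nested forks are not interpreted.

```python
def included_improvements(trunk):
    """Expand the part before the first period, e.g. "2:4-5" -> [1, 2, 4, 5]."""
    kept = set()
    for i, part in enumerate(trunk.split(":")):
        if "-" in part:                 # explicit range such as "3-5"
            lo, hi = (int(n) for n in part.split("-"))
        elif i == 0:                    # leading bare N is shorthand for 1 through N
            lo, hi = 1, int(part)
        else:                           # later bare number keeps just that improvement
            lo = hi = int(part)
        kept.update(range(lo, hi + 1))
    return sorted(kept)


def parse_version(version):
    """Return (kept trunk improvements, fork suffix after the first period)."""
    trunk, _, fork = version.partition(".")
    return included_improvements(trunk), fork


for v in ["3", "1:3.1", "2.1", "1:3-5.1", "2:4-5.1", "1-2:4-5.1"]:
    print(v, parse_version(v))
```

With this reading, "2:4-5.1" and "1-2:4-5.1" expand to the same set of kept improvements, matching the equivalence stated above.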

Note that when a submitter proposes to create an improvement without forking, it will initially be created as a fork, and then it must be accepted by one of the owners of the trunk in order to be numbered as a trunk improvement instead of a fork. This is necessary so that owners don't have to create forks to indicate they don't approve of an improvement.

Note there is no automated way to precisely verify that a particular improvement actually includes and omits the improvements it says it does. Thus it is allowed to have two forks of the same fork, and this is done using a "..n.1", e.g. "1:3..1.1" and "1:3..2.1" would be alternative forks of "1:3.1".

Note this proposed module version numbering scheme bears almost no correlation of semantics to existing project version numbering schemes that also employ periods.

So which version will people use if there are multiple forks (which tip of which branch of a metaphorical tree)? They will use whichever fork they think best fits their needs. The popularity of forks in derivative software will be a vote on their fitness, i.e. the free market will anneal the answer. The module owner(s) may endorse a particular fork by making improvements in it. This is superior to the current open source model, where forking is highly discouraged and the project owners (current languages don't support fine-grained module reuse) are (hopefully "benevolent") dictators "for life" (Linus Torvalds and others have been referred to with the quoted title).

[1] Salaries are those of the company developing the derivative software product, not any salaries that were paid to develop the modules that are offered on Copute.com.
